Realization of High-Speed Robot Manipulation for Variety Parts by Data Abstraction and Simplified Control

- Robot

- Automation

- Visual Feedback

- Tactile Feedback

- Manipulation

In recent years, the labor costs of highly skilled workers have been rising in Japan and other countries whose economies have shifted from manufacturing to services, and there is a growing need for robotic automation at medium- to low-volume production sites, where work has mainly been done by hand. We therefore focused on the kitting process, which is an essential pre-assembly process in medium- and low-volume production, and examined the feasibility of a robot technology that can (1) perform at a speed equivalent to or faster than that of a human, (2) adjust the position and orientation of parts, and (3) arrange various parts in predetermined locations, so that it can replace human workers without reducing productivity. Among the issues with existing technology, the hardware issues were addressed by improving our existing hand. The software issue is that supporting various parts increases the human resources required for programming. To solve it, this paper defines the abstract data required for robot task execution and an architecture that automatically converts the sensor data acquired by the robot into abstract data for control. This paper introduces a high-speed handling method that enables a robot to complete tasks without increasing the programming effort. We also report the construction of an actual kitting system using our method and the achievement of (1) to (3).

1. Introduction

Conventionally, industrial robots have mainly been used for repetitive work that requires accuracy and efficiency, such as painting and welding in the automobile industry. People, on the other hand, have mainly performed work that must adapt to the environment, such as handling various types of objects and correcting position errors. In recent years, in Japan and other countries that have shifted from manufacturing to services, the labor costs of highly skilled workers have been rising sharply. This has led to the introduction of industrial robots at low-variety, high-volume manufacturing sites that handle products with similar shapes, such as boxing completed products at shipping sites and arranging food in food factories. Going forward, such robots are expected to be applied at medium- and low-volume production sites. Under these circumstances, this study focused on the kitting process required before assembling digital devices in medium- and low-volume production and examined the feasibility of automating it. To replace humans with robots while maintaining productivity, robots are required to (1) perform at a speed equivalent to or faster than that of a human, (2) adjust the position and orientation of parts, and (3) arrange various parts in a predetermined location (hereafter, the placing location).

Conventionally, jigs have been designed according to the shapes of workpieces in order to handle them at a speed equivalent to or higher than that of a human1). Moreover, feedback control using sensors has been considered for autonomously adjusting (correcting) position and orientation according to the work2). However, neither method achieves both (1) and (2), that is, correcting position and orientation errors at a speed equivalent to or higher than that of a human. In the research field of soft robotics, on the other hand, contact with the environment is actively exploited. Karako et al. demonstrated that assembly tasks could be executed at a speed equivalent to or higher than that of a human by using a technique from this field that estimates and corrects position and orientation errors by exploiting contact with the environment3). However, since this technique only supports inserting ring-shaped workpieces onto shafts, it does not support a variety of workpieces and placing methods.

The purpose of this study is to improve compatibility with various workpieces, building on the study by Karako et al.3), in which position and orientation errors were estimated and corrected at a speed equivalent to or higher than that of a human. Supporting various workpieces requires solving two issues: (a) realizing hardware that supports various workpieces and (b) reducing the labor costs incurred by implementing a separate control program for each workpiece. We addressed issue (a) by improving the hand developed in the previous study3) for a variety of workpieces. We resolved issue (b) by hierarchizing the sensor data obtained during kitting, defining an architecture that outputs highly abstract data that are common to kitting tasks and independent of the workpiece or orientation, and then generating command data for the arm and hand from these abstract data. Furthermore, we built a kitting system using solution techniques (a) and (b) and confirmed that it could place various parts in predetermined locations while adjusting their position and orientation at a speed equivalent to or higher than that of a human.

This paper mainly reports on solution technique (b). Chapter 2 describes the abstraction technique for sensor data, which is compatible with a variety of workpieces, and a control system that uses these abstract data to autonomously correct position and orientation errors at a speed equivalent to or higher than that of a human. Chapter 3 describes the hardware used as the basis for the kitting system, its operation strategy, and the abstract data and system actually implemented. Chapter 4 gives evaluation results for kitting work performed with the built system, and Chapter 5 gives a summary and future prospects.

2. Proposed Techniques

In this study, to correct position and orientation errors at a speed equivalent to or higher than that of a human, as kitting requires, we adopt the technique developed by Karako et al.3) for estimating and correcting position and orientation errors by exploiting the environment, and we work on the remaining issue of improving compatibility with a variety of workpieces. This issue divides into a hardware issue, realizing a hand that can grip various workpieces, and a software issue, implementing and adjusting control programs for each workpiece and placing location. The hardware issue was resolved by adding functions to a previously developed hand (see Chapter 3 for details). This chapter mainly describes the software issue: the implementation and adjustment of control software.

2.1 Policies

In the previous study3), a control program was implemented for each workpiece and placing location because the data used depended on them. Therefore, rather than directly handling raw data, such as the outputs of the various sensors on the robot and the angles of the arm and hand motors, we decided to convert these data into abstract data that are required for automatic execution of kitting and do not depend on the workpiece or the work, and to perform control using these abstract data. This allows kitting work to be executed with the same control program even when the workpiece or placing location changes.

2.2 Definition of Requirements for Abstract Data

Based on the following requirements for automating kitting, we define the requirements for abstract data, which are central to the policy set in Section 2.1.

- Requirement 1. The arm has to autonomously correct the positions and orientation according to the workpiece and placement location.

- Requirement 2. The hand has to grip the workpiece stably without dropping it even during fast work.

- Requirement 3. The robot has to complete kitting within a time equivalent to the time required by a human.

2.2.1 Abstract Data Derived from Requirement 1

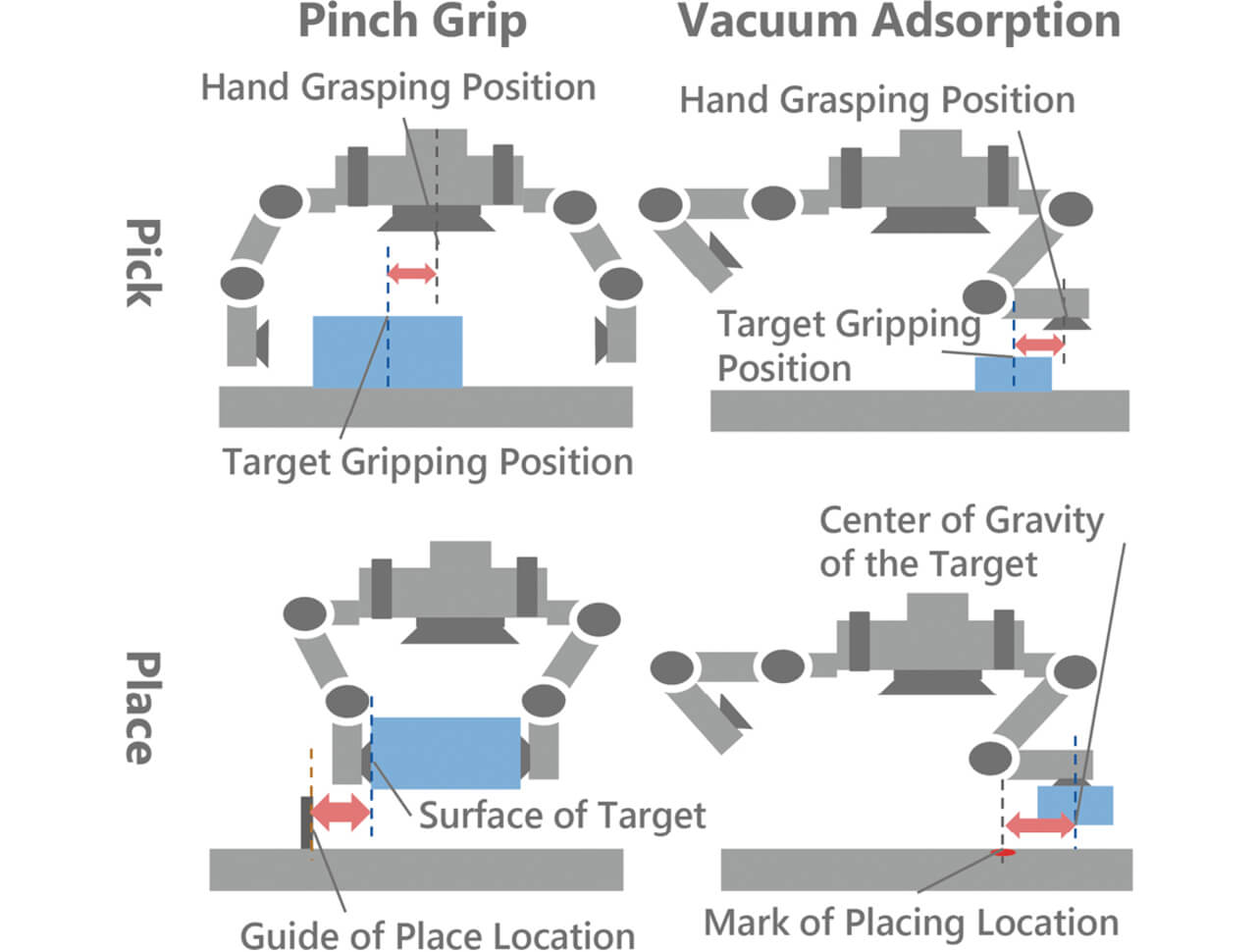

As shown in Fig. 1, during picking the arm is controlled to align the hand grasping position with the target gripping position on the workpiece, so that the workpiece is gripped at the hand grasping position given by the upper-level system (which is not necessarily the center of the hand). During placement, the hand gripping the workpiece is regarded as an integrated hand that includes the workpiece, and the arm is controlled to align its planes, edges, corners, or center of gravity with the positions and orientation of the guides and marks at the placing location. With this control, position and orientation errors are corrected by the same control for both picking and placing, without creating individual programs. Moreover, because (b) the finger height changes when the fingers are moved, the arm needs to follow this change in height.

To align position and orientation during (a) picking and placing, position and orientation information obtained from the upper-level system and from sensors is used. One example is a sensor that can measure the position and orientation error between two objects (defined in Section 3.3.1).

Then, finger angle information

2.2.2 Abstract Data Derived from Requirement 2

When the workpiece is gripped by multiple fingers, the most stable condition for a given finger arrangement is that the external force each finger receives from the workpiece acts in the normal direction at the contact point or surface4). When the workpiece is held by a single finger, for example by adsorption, the stable gripping state is the one in which the workpiece is adsorbed at the target gripping position within the gripping position specified by the upper-level system. In either case, if the workpiece is not gripped stably, it tilts relative to the finger, causing an imbalance between the forces received at different portions of each finger's surface (e.g., between the fingertip and the finger base).

Therefore, to determine whether the hand grips the workpiece as expected, the force imbalance on the surface of finger i is observed. This value is 0 when there is no imbalance and increases with the magnitude of the imbalance. Since this value equivalent to force applied to the finger surface of finger i

As stated at the beginning of this section, when the workpiece is gripped by multiple fingers, each finger must sense at least a certain level of force. This external force received by finger i should be a value that increases and decreases with the external force actually acting on the finger and is 0 when no external force acts. Furthermore, because the value is controlled to barely exceed 0, the control works even if the assumed force contains a scale error of several tens of percent, so precise sensor calibration is not required. Since this external force to the finger i
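The robustness claim above can be illustrated with a toy one-dimensional simulation. It is a sketch under stated assumptions, not the authors' controller: it assumes a linear contact stiffness, models calibration error as a multiplicative `scale` on the measured force, and uses hypothetical values for the contact position, stiffness, threshold, and step size.

```python
def close_until_contact(scale: float,
                        x_contact: float = 10.0,  # hypothetical contact position [mm]
                        k_contact: float = 2.0,   # hypothetical contact stiffness [N/mm]
                        eps: float = 0.01,        # "barely above 0" threshold [N]
                        dx: float = 0.01,         # finger advance per cycle [mm]
                        max_steps: int = 10_000) -> float:
    """Advance a finger until the *measured* external force barely exceeds 0.

    `scale` models a calibration error: measured force = scale * true force.
    Returns the finger position at which the controller stops.
    """
    for i in range(max_steps):
        x = i * dx                                    # integer stepping avoids drift
        true_force = k_contact * max(0.0, x - x_contact)
        measured_force = scale * true_force
        if measured_force > eps:
            return x
    raise RuntimeError("contact was never detected")


if __name__ == "__main__":
    for scale in (0.7, 1.0, 1.3):  # -30 %, 0 %, +30 % scale error
        print(f"scale={scale:.1f}: stop at x={close_until_contact(scale):.3f} mm")
```

With these values, scales of 0.7, 1.0, and 1.3 all stop the finger at about x = 10.01 mm, one step after first contact, because the measured value leaves 0 almost as soon as the true force does. If the controller instead regulated the force to a large absolute value, the true force at the stopping point would vary in inverse proportion to the scale (roughly −23 % to +43 % here).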

2.2.3 Abstract Data Derived from Requirement 3

Humans work efficiently by using each finger independently while moving their arms and, depending on the work, by moving their arms and fingers in coordination. This system adopts a similar mechanism. Command values are input separately to the arm and the hand so that they can operate independently. Moreover, each outputs its degree of achievement of the work so that it can wait, when necessary, until the other has achieved its work and then start the next work in coordination. For both the arm and the hand, we noted that speed decreases as the current value approaches the target control value, and the absolute value of arm speed
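The idea that speed itself can serve as the degree of achievement can be sketched with two independent proportional controllers. This is a minimal sketch assuming 1-D stand-ins for the arm and hand, hypothetical gains, a 2 ms cycle, and a hypothetical tolerance; the `Axis` class and `run_until_both_achieved` function are illustrative, not part of the described system.

```python
from dataclasses import dataclass


@dataclass
class Axis:
    """Hypothetical 1-D stand-in for either the arm or the hand."""
    position: float
    gain: float  # proportional gain [1/s]; speed shrinks as the error shrinks

    def step(self, target: float, dt: float) -> float:
        """Advance one control cycle and return |speed| as the degree of achievement."""
        speed = self.gain * (target - self.position)
        self.position += speed * dt
        return abs(speed)


def run_until_both_achieved(arm: Axis, hand: Axis,
                            arm_target: float, hand_target: float,
                            dt: float = 0.002, tol: float = 1e-3,
                            max_cycles: int = 10_000) -> int:
    """Command arm and hand independently; return the cycle at which both are achieved."""
    for cycle in range(max_cycles):
        arm_achievement = arm.step(arm_target, dt)    # both move in the same cycle
        hand_achievement = hand.step(hand_target, dt)
        if arm_achievement < tol and hand_achievement < tol:
            return cycle  # both near 0: the next work can start in coordination
    raise RuntimeError("targets not achieved within max_cycles")


if __name__ == "__main__":
    arm, hand = Axis(position=0.0, gain=20.0), Axis(position=0.0, gain=50.0)
    cycles = run_until_both_achieved(arm, hand, arm_target=0.1, hand_target=0.5)
    print(cycles, round(arm.position, 4), round(hand.position, 4))
```

Because each command is proportional to the remaining error, |speed| falls toward 0 exactly as the axis approaches its target, so no separate position-based completion check is needed. Both axes are commanded in every cycle, and the loop returns only once both achievement values are near 0, which is the coordination point at which the next work can begin.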

2.3 Configuration of Control System Based on Proposed Policies

2.3.1 Realization of a Task

Feedback control is performed using the abstract data of Requirements 1 and 2 described in Section 2.2. This control does not depend on the workpiece or the work, and the arm and hand stop moving when they reach the target state.

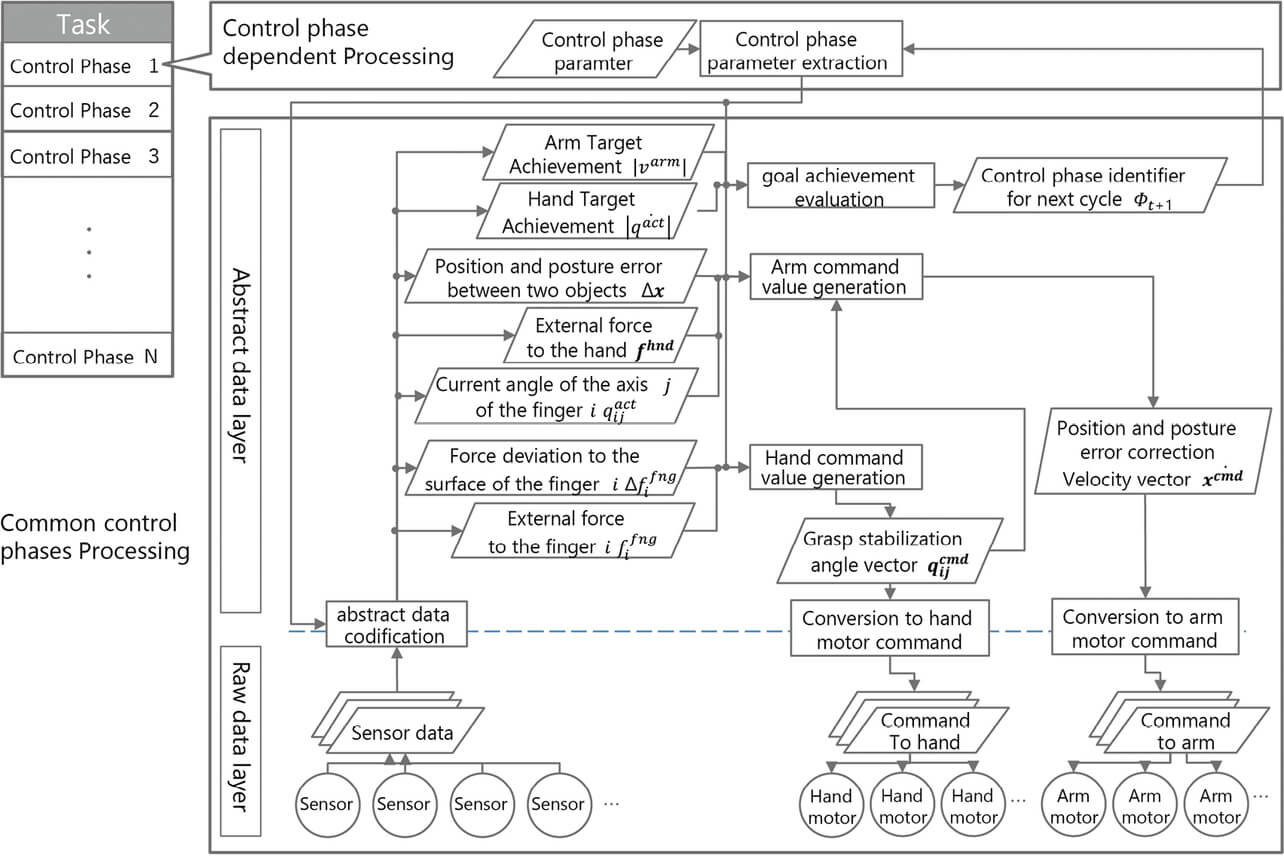

Since this stopping of motion is equivalent to the abstract data of Requirement 3 reaching “0,” achievement of the target state is judged based on the abstract data of Requirement 3. This control realizes the individual operations that make up kitting, such as moving the arm to the workpiece location and gripping the workpiece. In this paper, these individual operations are called control phases. Tasks such as kitting are achieved by preparing multiple control phases and executing them in order (left side of Fig. 2).

As shown on the right side of Fig. 2, the processing of each control phase is realized by substituting parameters that depend on the control phase (detailed in Section 2.3.4) into processing common to all control phases. Following the policy in Section 2.1, this common processing proceeds as follows: data in the raw data layer output by the sensors are converted into data in the abstract data layer; command values are generated in the abstract data layer and converted back into command values in the raw data layer, which are sent to the motors of the arm and the hand. The raw data layer handles the data of the sensors and of the arm and hand motors, whereas the abstract data layer handles data that do not depend on the workpiece or the work.
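The layered processing can be summarized as a single function that every control phase reuses, with only the phase parameters swapped in. The sketch below assumes a proportional command law in the abstract layer and uses hypothetical names (`PhaseParameters`, `codify_finger`, `finger_to_motor`) and a hypothetical 12-bit encoder; the actual abstract data and command equations are those of Sections 2.3.2 and 3.3.

```python
from dataclasses import dataclass
from typing import Callable, Dict

RawData = Dict[str, float]       # sensor readings or motor commands (device units)
AbstractData = Dict[str, float]  # workpiece-independent quantities


@dataclass
class PhaseParameters:
    """Hypothetical parameters substituted into the common processing for one phase."""
    targets: AbstractData  # target value of each abstract quantity
    gains: AbstractData    # proportional gain for each abstract quantity


def control_cycle(raw: RawData, params: PhaseParameters,
                  codify: Callable[[RawData], AbstractData],
                  to_motor: Callable[[AbstractData], RawData]) -> RawData:
    """One cycle of the processing shared by all control phases:
    raw data layer -> abstract data layer -> command values -> raw data layer."""
    abstract = codify(raw)  # abstract data codification
    command = {key: params.gains[key] * (params.targets[key] - abstract[key])
               for key in params.targets}  # command generated in the abstract layer
    return to_motor(command)  # converted back into motor units


# Hypothetical device-specific conversions for a single finger joint.
def codify_finger(raw: RawData) -> AbstractData:
    return {"finger_angle_deg": raw["encoder_counts"] * 360.0 / 4096}  # 12-bit encoder


def finger_to_motor(command: AbstractData) -> RawData:
    return {"motor_velocity_dps": command["finger_angle_deg"]}  # deg/s velocity command


if __name__ == "__main__":
    grip = PhaseParameters(targets={"finger_angle_deg": 45.0},
                           gains={"finger_angle_deg": 10.0})
    print(control_cycle({"encoder_counts": 256}, grip, codify_finger, finger_to_motor))
    # {'motor_velocity_dps': 225.0}
```

Moving to another phase changes only the `PhaseParameters` object passed in; `control_cycle` itself stays the same, which is the property that lets one program serve different workpieces. The two conversion functions are the only device-specific code.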

2.3.2 Generation of Command Values

In each control phase, the raw data output by the sensors are converted by the abstract data codification function into abstract data that meet the requirements described in Section 2.2. The abstract data codification function defines the equations for calculating these abstract data from the sensor data. (For the definition of abstract data in the kitting system created in this study, see Section 3.3.)

The command value input to the arm position and posture error correction velocity vector

Here,

The generation equation of

The generation equation

Moreover, the term ave() represents the average fingertip speed over the fingers i and serves to keep the fingertip position constant by following the positional changes caused by the picking operation of the fingers. If it is not necessary to keep the position constant when the fingertip is moved,

Here,

These command value vectors must be converted into the units required to operate each axis motor of the arm and hand, in accordance with the interface of the motor driver in use.
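The generation equations for the arm command did not survive in this text, so the sketch below does not reproduce them. It only illustrates the two steps described above under explicit assumptions: a correction velocity plus a follow-up term built from ave(), where fingertip velocities are assumed to be measured relative to the palm (hence the subtraction), and a conversion of the resulting Cartesian velocity into joint velocities for a hypothetical two-link planar arm with hypothetical link lengths.

```python
import math
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]


def ave(fingertip_velocities: Sequence[Vec2]) -> Vec2:
    """Mean fingertip velocity over all fingers i (palm frame, assumed)."""
    n = len(fingertip_velocities)
    return (sum(v[0] for v in fingertip_velocities) / n,
            sum(v[1] for v in fingertip_velocities) / n)


def arm_velocity_command(correction: Vec2,
                         fingertip_velocities: Sequence[Vec2],
                         keep_fingertips_fixed: bool = True) -> Vec2:
    """Correction velocity plus a follow-up term for finger motion (assumed form).

    Fingertip velocities are assumed to be relative to the palm, so the arm
    moves opposite to their mean to hold the fingertips still in space.
    """
    if not keep_fingertips_fixed:
        return correction
    mean_x, mean_y = ave(fingertip_velocities)
    return (correction[0] - mean_x, correction[1] - mean_y)


def jacobian(q: Vec2, l1: float = 0.3, l2: float = 0.25) -> Tuple[float, float, float, float]:
    """Jacobian entries (j11, j12, j21, j22) of a hypothetical 2-link planar arm."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return (-l1 * s1 - l2 * s12, -l2 * s12,
            l1 * c1 + l2 * c12, l2 * c12)


def joint_velocities(q: Vec2, v: Vec2, l1: float = 0.3, l2: float = 0.25) -> Vec2:
    """Solve J(q) * qdot = v for the joint velocities qdot [rad/s]."""
    j11, j12, j21, j22 = jacobian(q, l1, l2)
    det = j11 * j22 - j12 * j21  # equals l1 * l2 * sin(q2)
    if abs(det) < 1e-9:
        raise ValueError("arm is at (or near) a singular configuration")
    return ((j22 * v[0] - j12 * v[1]) / det,
            (-j21 * v[0] + j11 * v[1]) / det)


if __name__ == "__main__":
    v = arm_velocity_command((0.01, 0.0), [(0.0, 0.02), (0.0, 0.04), (0.0, 0.03)])
    print(v, joint_velocities((0.3, 0.8), v))
```

A real 6-axis arm would use its full Jacobian (or a pseudo-inverse near singularities) and then scale the joint velocities into whatever units the motor driver expects, for example through a gear ratio.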

2.3.3 Realization of Coordinated Execution of Arm and Hand

Since the arm and the hand need to be moved independently, command values to the arm and hand are output simultaneously. On the other hand, the abstract data codification function outputs arm target achievement

2.3.4 Parameters per Control Phase

The control phase parameters are data that characterize a control phase so that it can execute operations such as moving the arm to the workpiece location and gripping the workpiece. In this technique, the user or the upper-level system sets only these parameters. These parameters include the target value

Based on the current control phase, the control phase parameter extraction function outputs the corresponding control phase parameters to each function, such as the abstract data codification function and the target achievement evaluation function.
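The control-phase mechanism of Sections 2.3.1 and 2.3.4 can be sketched as a loop over phases in which a parameter extraction step feeds each function its own parameters. All names, fields, and the simulated `fake_measure` plant are hypothetical; the `wait_for_arm` and `wait_for_hand` flags are an assumed way of expressing that a phase may wait for only one of the two.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class ControlPhase:
    """Hypothetical control phase parameters set by the user or upper-level system."""
    name: str
    arm_target: float           # e.g. tip height [mm]
    hand_target: float          # e.g. finger angle [deg]
    wait_for_arm: bool = True   # must the arm reach its target before moving on?
    wait_for_hand: bool = True  # must the hand reach its target before moving on?


def extract_parameters(task: List[ControlPhase], index: int) -> Dict[str, dict]:
    """Control phase parameter extraction: give each function only what it needs."""
    phase = task[index]
    return {
        "codification": {"arm_target": phase.arm_target, "hand_target": phase.hand_target},
        "achievement": {"wait_for_arm": phase.wait_for_arm,
                        "wait_for_hand": phase.wait_for_hand},
    }


def phase_achieved(arm_achievement: float, hand_achievement: float,
                   params: dict, tol: float = 1e-3) -> bool:
    """Target achievement evaluation: a value near 0 means that motion has stopped."""
    arm_ok = arm_achievement < tol or not params["wait_for_arm"]
    hand_ok = hand_achievement < tol or not params["wait_for_hand"]
    return arm_ok and hand_ok


Measure = Callable[[dict, int], Tuple[float, float]]


def run_task(task: List[ControlPhase], measure: Measure,
             max_cycles: int = 1000) -> List[Tuple[str, int]]:
    """Execute control phases in order; return (phase name, cycles taken) per phase."""
    log = []
    for index, phase in enumerate(task):
        params = extract_parameters(task, index)
        for cycle in range(max_cycles):
            arm_ach, hand_ach = measure(params["codification"], cycle)
            if phase_achieved(arm_ach, hand_ach, params["achievement"]):
                log.append((phase.name, cycle))
                break
        else:
            raise RuntimeError(f"phase {phase.name!r} did not reach its target")
    return log


def fake_measure(params: dict, cycle: int) -> Tuple[float, float]:
    """Hypothetical plant: arm and hand speeds halve every cycle."""
    return params["arm_target"] * 0.5 ** cycle, params["hand_target"] * 0.5 ** cycle


if __name__ == "__main__":
    task = [ControlPhase("approach", arm_target=100.0, hand_target=0.0, wait_for_hand=False),
            ControlPhase("grip", arm_target=0.0, hand_target=45.0, wait_for_arm=False)]
    print(run_task(task, fake_measure))  # [('approach', 17), ('grip', 16)]
```

Each phase ends as soon as the achievement value it waits for drops below the tolerance; adding or reordering phases changes only the `task` list, not the loop.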

3. Realization of a System

In this study, the control system proposed in Chapter 2 was implemented, and the kitting system that operates at a speed equivalent to or higher than that of a human was built. This chapter describes this system.

3.1 Prerequisite Hardware

This section describes the equipment used to realize the system.

3.1.1 Flexible Hand Equipped with Adsorption Mechanism and Multiple Sensors

In order to build a kitting system that operates at a speed equivalent to or higher than that of a human, we created an improved hand by adding the following functional requirements to the hand developed in the previous study3).

- 1. To be able to grip a variety of workpieces by using one hand.

- 2. To be able to recognize the relative position and orientation errors of the workpiece with respect to the hand in both the gripped and non-gripped states, in order to calculate abstract data that meet the requirements in Section 2.2.

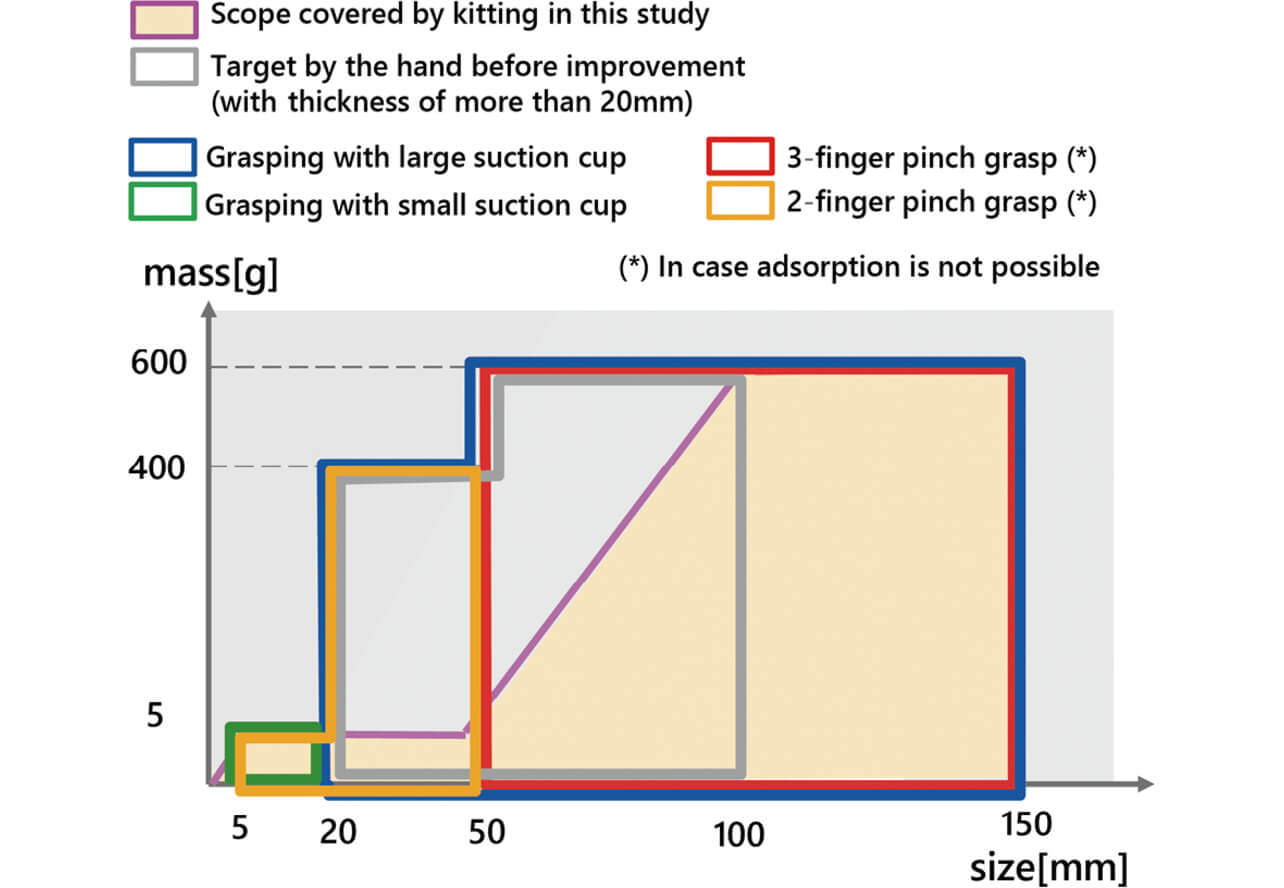

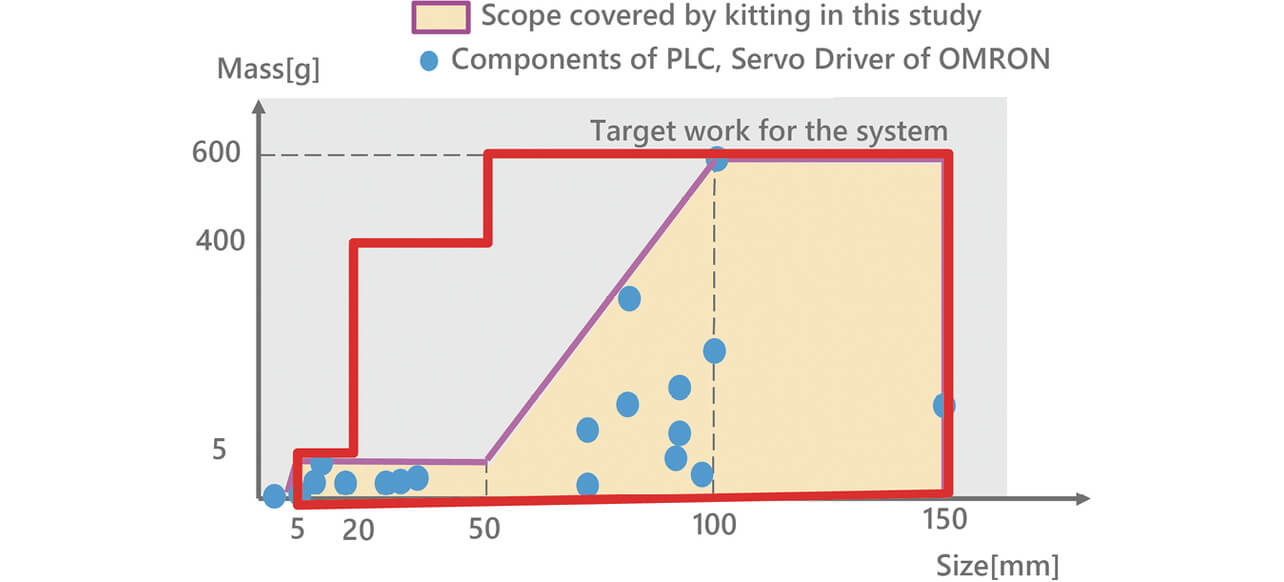

To meet the first requirement, we attached an adsorption mechanism with adsorption pads of various sizes to each finger so that workpieces in the range shown in Fig. 3 can be gripped by four types of gripping methods.

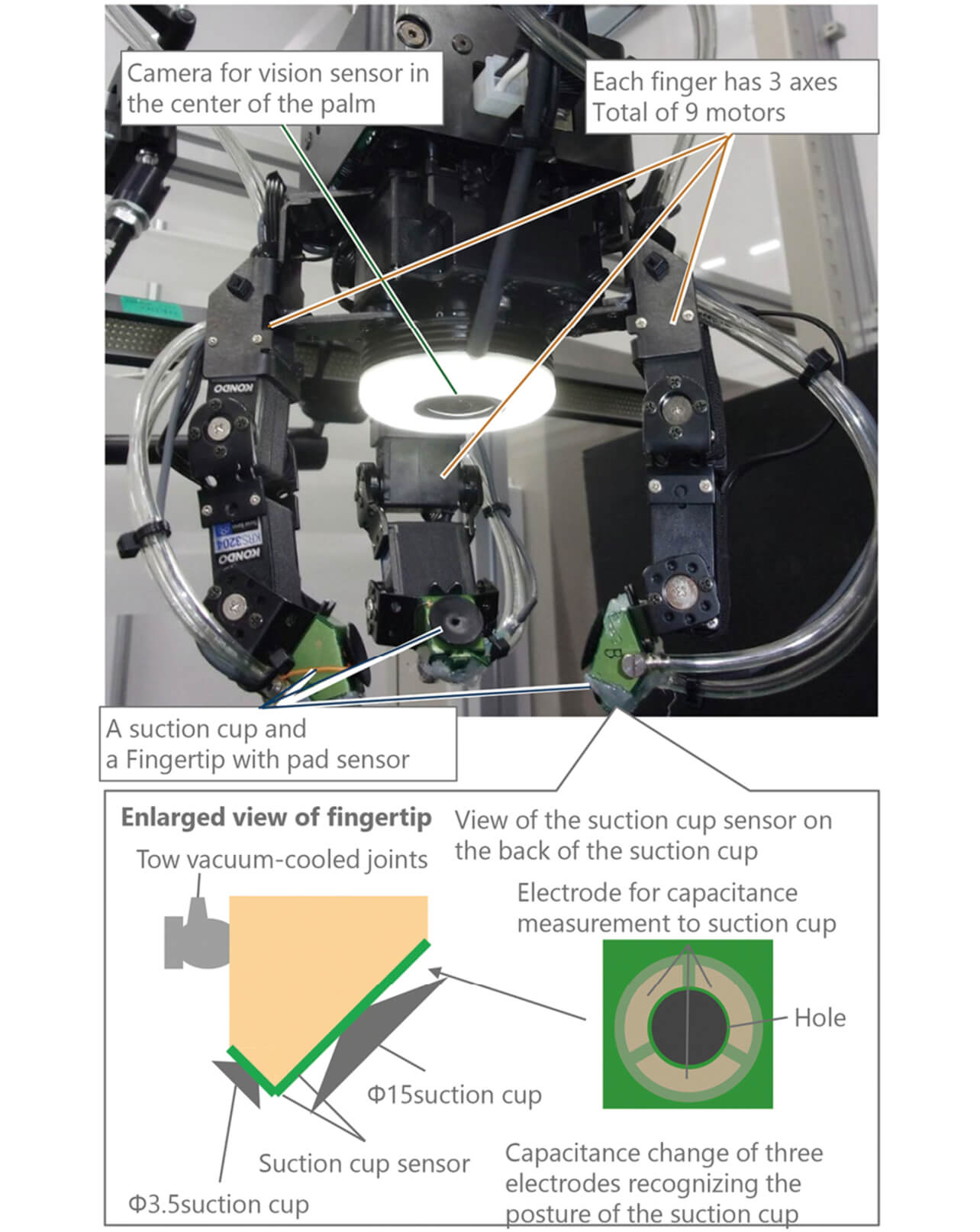

Then, to meet the second requirement, we attached a vision sensor camera at the center of the palm, together with adsorption pad sensors5) that detect changes in a total of 3 degrees of freedom (one axis for the pressing amount and two axes for orientation) based on changes in capacitance (Fig. 4). This allowed position and orientation errors with respect to the workpiece to be obtained in both the non-gripped and gripped states.

3.1.2 System Configuration Capable of Millisecond-Order Processing

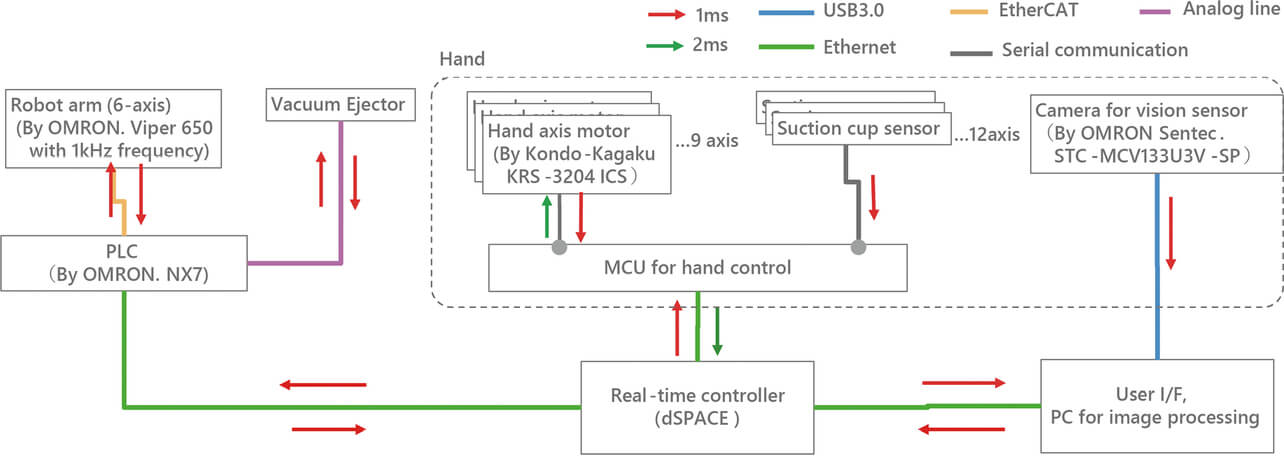

In the technique proposed in the previous study3), the control period was set to 1 ms to ensure high-speed operation of the system. Following this policy, we set the target control period to the millisecond order. Fig. 5 shows the completed system.

The system has the following three characteristics.

- 1. Because the image data from the vision sensor camera are large, they are processed on a dedicated computer connected via USB 3.0, which extracts only the necessary feature values within 1 ms and sends them to the real-time controller. Processing, including latency, is completed within 2 ms in total.

- 2. The controller and the hand's microcomputer are connected by 100BASE-T Ethernet, and connectionless UDP is used as the transport-layer protocol for sending and receiving data so that each process can achieve maximum throughput.

- 3. To maximize the processing capacity of the hand's microcomputer, pins are assigned so that the processing inside the motor after a command value is output to it runs in parallel with the microcomputer's processing after data are received from the sensor. Moreover, processing speed is increased by reducing the precision of the sensor data to the required level. Together, these measures ensure a processing interval of 2 ms per cycle.
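Item 2 can be illustrated with a connectionless UDP exchange of fixed-size binary packets. The packet layout (a 32-bit cycle counter plus four 32-bit float commands) is a hypothetical example, since the actual format is not given, and the loopback round trip only stands in for the controller-to-microcomputer link.

```python
import socket
import struct
from typing import List, Tuple

# Hypothetical fixed-size packet: uint32 cycle counter + four float32 commands.
PACKET = struct.Struct("<I4f")


def pack_command(cycle: int, angles: List[float]) -> bytes:
    return PACKET.pack(cycle, *angles)


def unpack_command(data: bytes) -> Tuple[int, List[float]]:
    cycle, *angles = PACKET.unpack(data)
    return cycle, angles


def loopback_roundtrip(cycle: int, angles: List[float],
                       timeout: float = 0.1) -> Tuple[int, List[float]]:
    """Send one datagram to a receiver on 127.0.0.1 and read it back.

    UDP is connectionless: there is no handshake and no retransmission,
    so a late or lost packet shows up as a timeout instead of blocking.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as rx:
        rx.bind(("127.0.0.1", 0))  # let the OS pick a free port
        rx.settimeout(timeout)
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as tx:
            tx.sendto(pack_command(cycle, angles), rx.getsockname())
        data, _ = rx.recvfrom(PACKET.size)
        return unpack_command(data)


if __name__ == "__main__":
    print(loopback_roundtrip(7, [0.5, 0.25, -1.0, 0.0]))  # (7, [0.5, 0.25, -1.0, 0.0])
```

In a 2 ms control loop, the timeout would be set to the time remaining in the current cycle; the generous 0.1 s default here only keeps the demonstration reliable.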

3.2 Creation and Operation Strategy of Kitting Control Program

Each control phase in the tasks shown in Fig. 2 is an operation that achieves a single goal, such as moving the arm above the workpiece and lowering it until it contacts the workpiece, or adsorbing and holding the workpiece with the adsorption pads. We created the control program for kitting by setting control phase parameters that enable such operations and specifying the execution order of the control phases in the task.

Setting the execution order of these control phases amounts to formulating the robot's operation strategy. To formulate this strategy, we adopted the approach from the previous study3) of estimating position and orientation errors and correcting them at high speed by exploiting the environment. Specifically, we perform (1) impact mitigation upon contact between the fingertip and the workpiece, and between the workpiece and the environment, by adding passive deformation elements on the robot side; (2) estimation of the kinematic relationship between the workpiece and the environment by observing the deformation of the passive elements, which arises from the force balance between the passive elements and the kinematic constraint between the workpiece and the environment; and (3) high-speed position and orientation adjustment control using (1) and (2). In the newly developed hand, the adsorption pads serve as the passive elements, and their deformation is measured by the adsorption pad sensors.

Using these, the hand can contact the workpiece at high speed, relying only on the passive deformation of the adsorption pads, even during gripping operations in which the workpiece height is uncertain. Moreover, when the gripped workpiece is placed in alignment with the guide, the workpiece can be brought into contact with the guide at high speed; then, using the kinematic information of the workpiece and guide obtained in advance, the relative position and orientation errors between the workpiece and the guide can be estimated and the misalignment resolved based on these estimates.
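Why passive pad deformation allows a fast approach under uncertain height can be shown with a one-dimensional guarded descent. This is a sketch with hypothetical speed, cycle, detection threshold, and pad stroke values: if contact is detected within one control cycle, the pad compression at the moment of detection is at most the threshold plus speed × cycle, which must stay within the pad's stroke.

```python
from typing import Tuple


def guarded_descent(z_start: float, z_surface: float, speed: float,
                    cycle: float = 0.002,      # control period [s]
                    threshold: float = 0.1,    # hypothetical contact threshold [mm]
                    pad_stroke: float = 3.0,   # hypothetical pad compliance limit [mm]
                    max_cycles: int = 100_000) -> Tuple[float, float]:
    """Lower the pad tip at constant speed until the pad sensor reports contact.

    Returns (commanded height, pad compression) at the cycle contact is detected.
    """
    step = speed * cycle  # descent per control cycle [mm]
    for i in range(max_cycles):
        z = z_start - i * step                 # commanded pad-tip height [mm]
        deformation = max(0.0, z_surface - z)  # passive pad compression [mm]
        if deformation > pad_stroke:
            raise RuntimeError("pad bottomed out: descent too fast for one-cycle detection")
        if deformation > threshold:
            return z, deformation              # contact detected: stop descending
    raise RuntimeError("surface never reached")


if __name__ == "__main__":
    print(guarded_descent(z_start=50.0, z_surface=10.0, speed=200.0))
```

At 200 mm/s with a 2 ms cycle, the arm moves 0.4 mm per cycle and, in this configuration, stops with about 0.4 mm of pad compression. At 2000 mm/s it moves 4 mm per cycle, which exceeds the assumed 3 mm stroke, and the function raises an error.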

3.3 Definition of Abstract Data

This section defines each type of abstract data in the kitting system created in this study.

3.3.1 Position and Orientation Error between Two Objects

The position and orientation error between two objects

Here,

3.3.2 External Force to the Hand

The value equivalent to the external force received by the hand

Here, kp is the factor for converting the deformation amount of the adsorption pad to force,

3.3.3 Force Deviation on the Surface of Finger i


The value

Here, kfng is the factor for converting the deformation amount of the adsorption pad into force,

If one finger overpowers the others when the workpiece is gripped with multiple fingers, that finger moves inward past its target position, which appears as a position error

3.3.4 Other Abstract Data

- External force to finger i: Scalar quantity. The deformation amount p_i of the adsorption pad measured by the adsorption pad sensor is used.

- Current angle of each finger: Scalar quantity. Retrieved from the encoder of each axis motor of the hand.

- Degree of achievement of arm target: Scalar quantity. The speed of the arm tip, calculated using the Jacobian matrix of the arm.

- Degree of achievement of hand target: Scalar quantity. The average of the absolute angular speeds of the hand axes, calculated from the angular speeds retrieved from the motors.
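The two degree-of-achievement definitions above can be sketched as follows. The arm case assumes a hypothetical two-link planar arm with hypothetical link lengths, where the tip speed is the norm of the Jacobian times the joint velocities; a real arm would use its own Jacobian.

```python
import math
from typing import Sequence, Tuple


def arm_achievement(q: Tuple[float, float], qdot: Tuple[float, float],
                    l1: float = 0.3, l2: float = 0.25) -> float:
    """Tip speed |J(q) qdot| [m/s] of a hypothetical 2-link planar arm."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    vx = (-l1 * s1 - l2 * s12) * qdot[0] - l2 * s12 * qdot[1]
    vy = (l1 * c1 + l2 * c12) * qdot[0] + l2 * c12 * qdot[1]
    return math.hypot(vx, vy)


def hand_achievement(joint_speeds: Sequence[float]) -> float:
    """Mean of the absolute angular speeds of the hand axes [rad/s]."""
    return sum(abs(w) for w in joint_speeds) / len(joint_speeds)


if __name__ == "__main__":
    print(arm_achievement((0.0, 0.0), (1.0, 0.0)))   # about 0.55 (= l1 + l2)
    print(hand_achievement([0.2, -0.4, 0.0, 0.6]))   # about 0.3
```

Taking absolute values before averaging matters for the hand: axes turning in opposite directions would otherwise cancel and report a false 0. For example, `hand_achievement([0.5, -0.5])` is 0.5, not 0.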

3.4 Kitting System Built in This Study

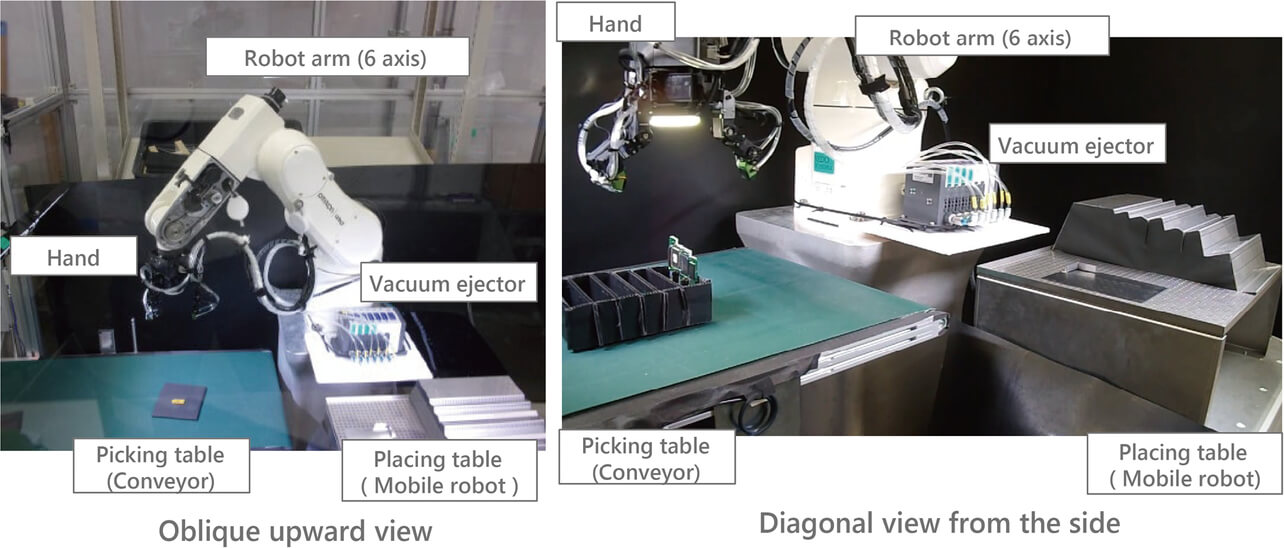

In this study, the equations for abstract data defined in Section 3.3 were implemented in the abstract data codification function shown in Fig. 2, so that the control program created in Section 3.2 could be executed on the real-time controller of the system described in Section 3.1.2, and the kitting system was built using the hand described in Section 3.1.1. A mobile robot equipped with a conveyor on which the workpieces to be picked are arranged, and a workpiece storage area used as the placing location, are arranged around the system (Fig. 6).

4. Evaluation

This chapter describes the evaluation experiments conducted on the system built in Chapter 3. In these experiments, we confirmed the requirements for the kitting process: to (1) perform at a speed equivalent to or faster than that of a human, (2) adjust position and orientation, and (3) arrange various parts in a predetermined location. Assuming use at in-house manufacturing sites, we chose parts of a programmable logic controller (PLC) and a servo driver manufactured by Omron as the workpieces. Table 1 shows the target values used in the evaluation. The target for responsiveness to position and orientation errors, ±10 mm, was set as the maximum error between the part position given in advance by the camera installed outside the system for detecting parts and the true part position. The 1.5-degree target was set as the tilt at which height changes by 5 mm when the part moves 20 cm horizontally, which is the maximum angle error that could arise when a worktable is built from aluminum or similar framing. The target for correcting position error was set to within 1 mm, which is expected to cause no problem in the subsequent assembly process. The target for high speed was set to 4 seconds because, when the authors themselves performed the kitting in the same environment, the average and maximum times were 3.8 and 4.6 seconds, respectively.
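The 1.5-degree figure follows from the stated 5 mm height change over 20 cm of horizontal travel:

```latex
\theta = \arctan\!\left(\frac{5\ \mathrm{mm}}{200\ \mathrm{mm}}\right)
       = \arctan(0.025) \approx 1.43^\circ \approx 1.5^\circ
```

The rounded value of 1.5 degrees is therefore a slightly conservative bound on this tilt.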

| Evaluation item | Target |

|---|---|

| 1. High speed | Kitting completed within 4 seconds per workpiece |

| 2. Responsiveness against position and orientation error | Even if there is difference (┬▒10 mm, ┬▒1.5 degrees) between the position and orientation information given to the workpiece and placing location and true information, the difference is autonomously corrected to be within 1 mm and kitting is completed |

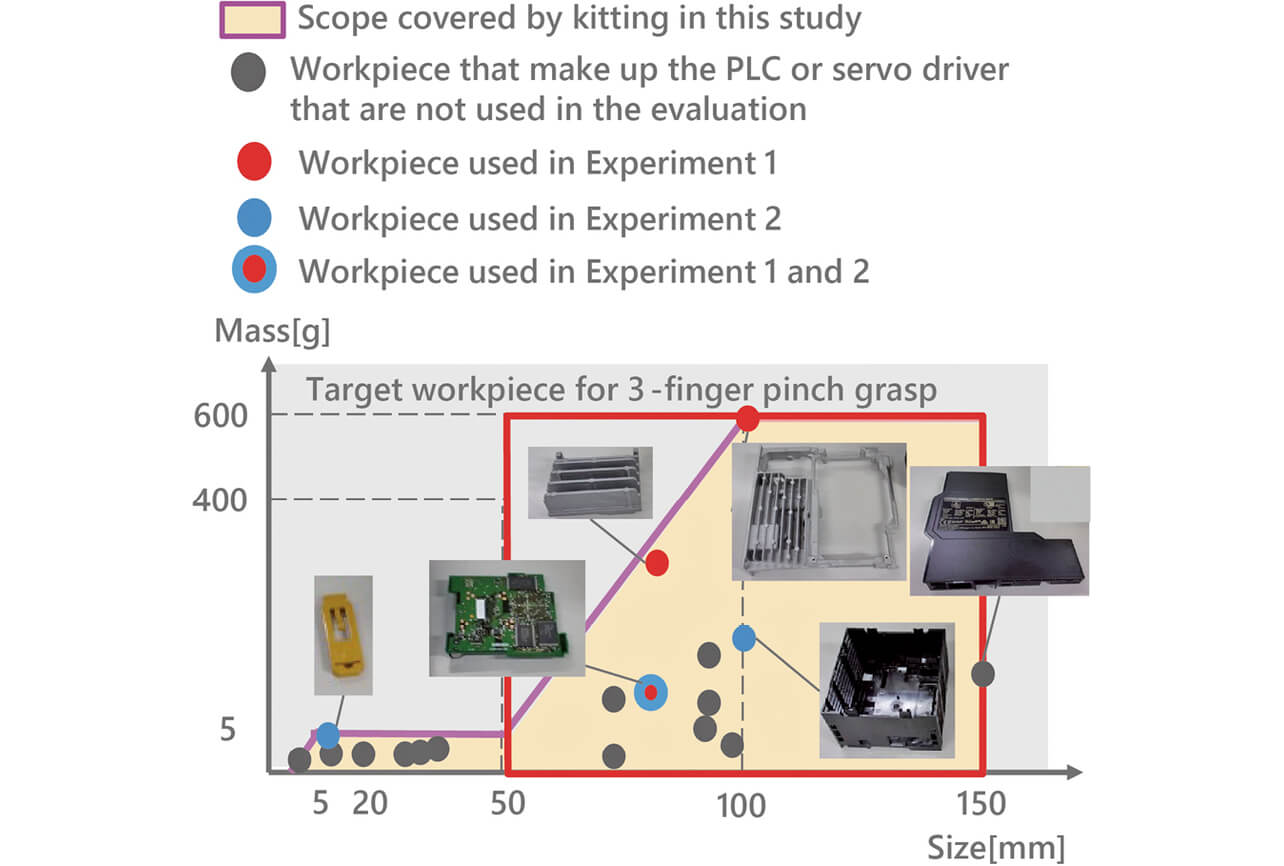

| 3. Compatibility against various workpieces | Compatible with workpieces within the red line in Fig. 7 |

In this study, two evaluation experiments were conducted to check each evaluation item as described below.

- Experiment 1. Experiment to confirm that workpieces with different shapes but the same gripping method can be gripped with a single control program.

- Experiment 2. Experiment to confirm that, for workpieces with different gripping methods, kitting can be performed within 4 seconds with automatic correction of position and orientation, even when the position and orientation information given in advance deviates by ±10 mm and ±1.5 degrees.

4.1 Experiment 1

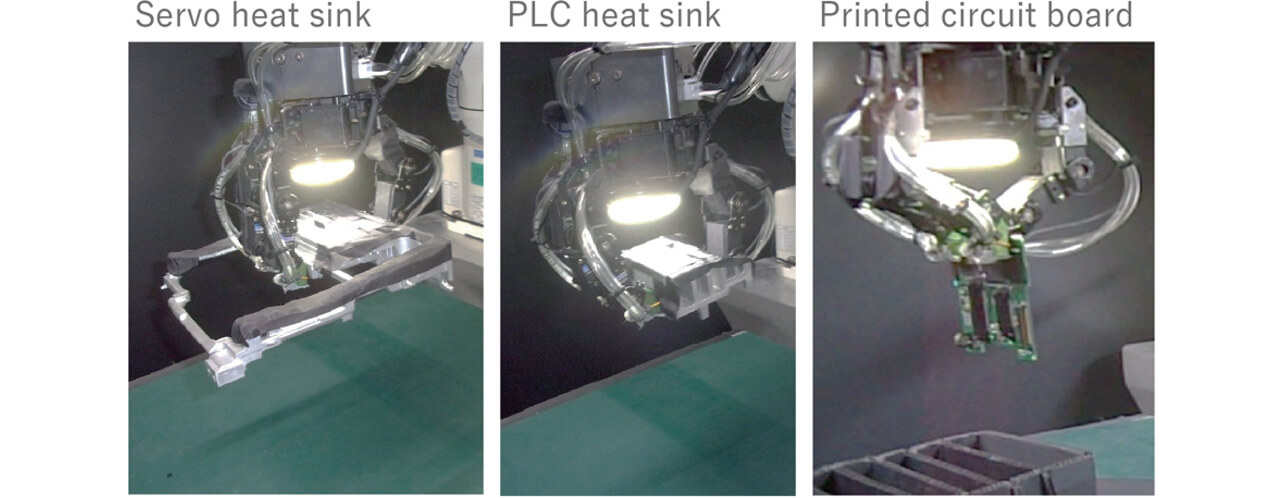

The hand used in this experiment supports four gripping methods. Of these, the two that use adsorption were evaluated in Experiment 2. Moreover, the workpieces shown in Fig. 8 did not include any that are gripped by pinching with two fingers. Therefore, in Experiment 1, we evaluated whether the workpieces could be gripped with three fingers using a single control program.

For this evaluation, we chose three workpieces that were difficult to grip stably (Fig. 8): the heaviest one, which has a biased center of gravity; one with high density and weight; and the heaviest of the thin workpieces with rough surfaces. Since the other workpieces were lighter and of similar size, the system was expected to grip them if it could grip these three. One workpiece, 150 mm in size at the boundary of the red line in Fig. 8, was not selected: it was a thin workpiece that was difficult to grip by picking and was instead gripped by adsorption, so it was outside the scope of this experiment. The experiment confirmed that the system could grip all three workpieces, as shown in Fig. 9.

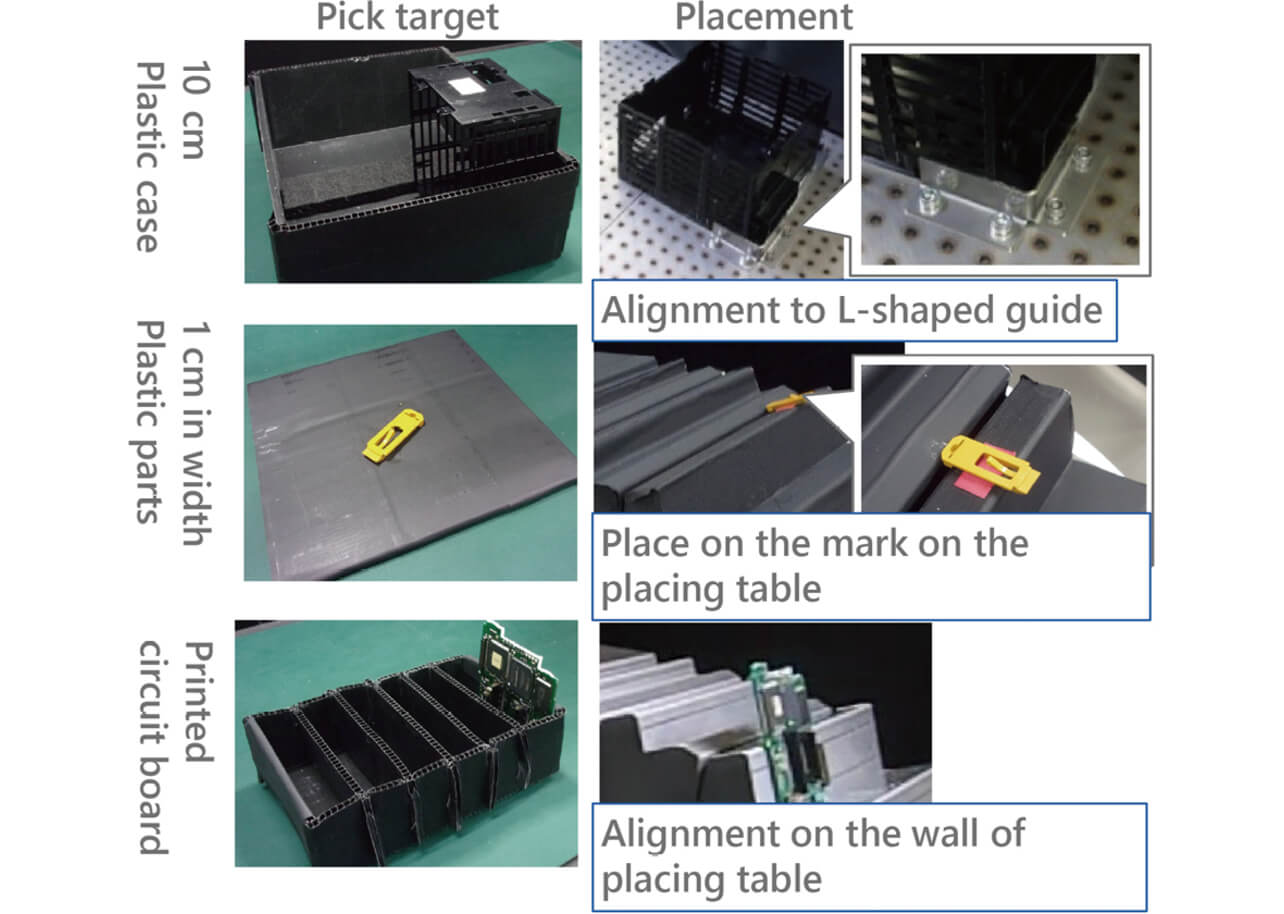

4.2 Experiment 2

Since the workpieces prepared for this study did not include any gripped by picking with two fingers, we conducted the kitting evaluation experiment using the three gripping methods other than two-finger picking. We reasoned that if kitting succeeded on workpieces considered difficult to grip with each method, it would also succeed on other workpieces, and therefore chose the workpieces shown in Fig. 10. The 10 cm plastic case was gripped by adsorption using a large pad, but because it is tall, the adsorbed portion was strongly affected by inertia, resulting in poor gripping stability. For the 1 cm wide plastic part, the portion that could be adsorbed was offset from the center of gravity, so a gravitational moment acted on the adsorbed portion, which also resulted in poor gripping stability. The printed circuit board had no surface suitable for adsorption, so it could only be gripped by picking. Moreover, in picking, two fingers arranged in parallel face the remaining finger, so the two fingers overpower the third, requiring force control. This made it very difficult to grip the workpiece robustly and stably. As shown in Fig. 10, each workpiece was placed at the picking location as in actual kitting and was to be placed in line with the guide at the placing location, assuming the subsequent assembly process.

To require the maximum amount of error correction, the target coordinates given for the picking location and the placing location were offset from the actual workpiece position and the actual guide position by +10 mm and +1.5 degrees and by −10 mm and −1.5 degrees, respectively. Under these conditions, picking and placing were performed five times for each workpiece, and the absolute position error relative to the placing location and the execution time from picking to placement were measured.

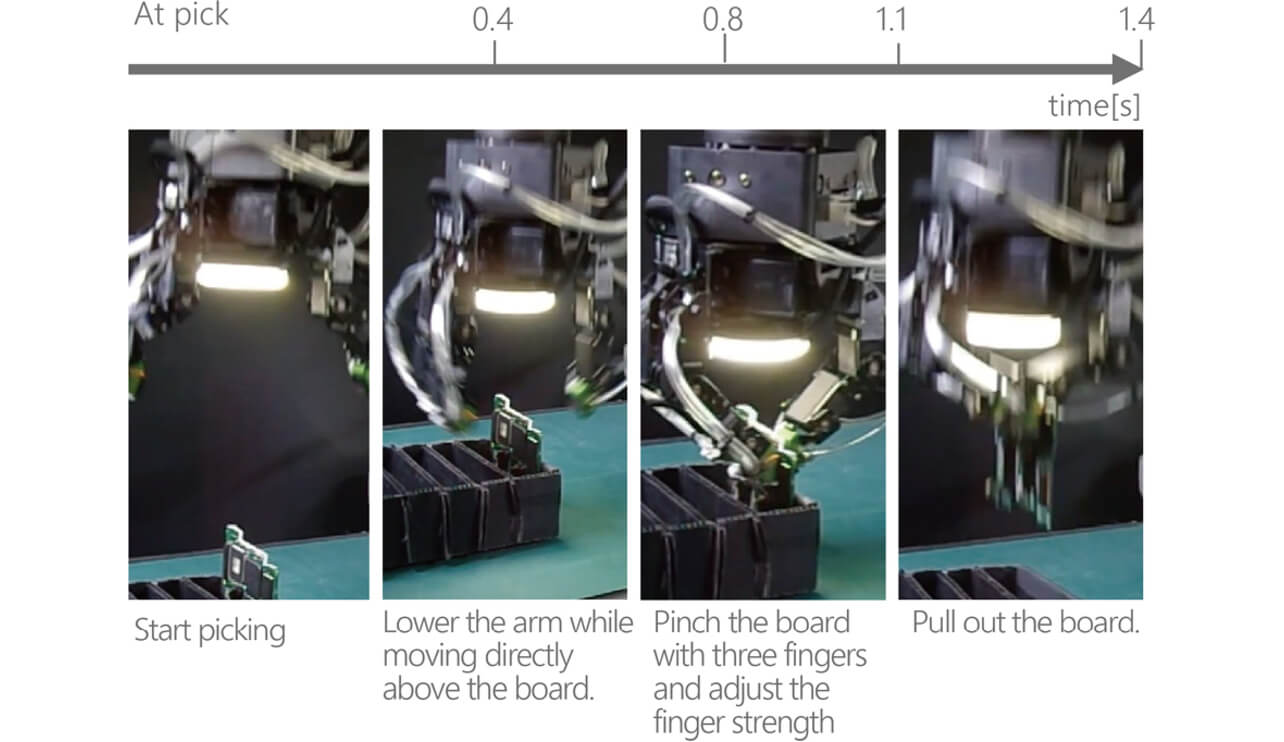

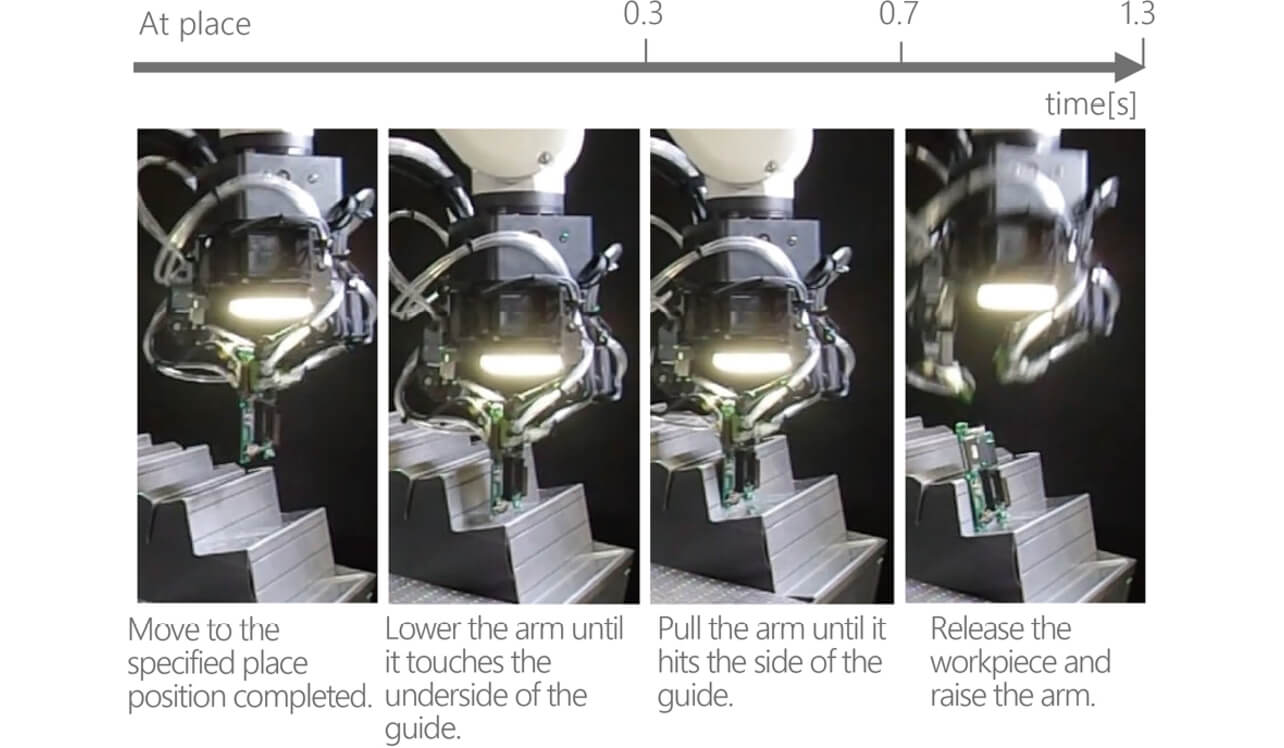

Fig. 11 and Fig. 12 show the process of Experiment 2 for the printed circuit board. As shown in Fig. 11, during picking, the vision sensor on the hand enables it to grip the workpiece while autonomously correcting the position and orientation errors given before execution. The system also autonomously corrects differences in the position and orientation of the guide during placement. As shown in Fig. 12, the arm was lowered until the system detected that the circuit board touched the bottom surface of the guide, and was then pulled forward until the system detected that the board touched the side of the guide. The guide was placed at an angle of 1.5 degrees; when the circuit board touched the guide, the soft fingertips of the hand allowed the orientation error to be corrected so that the guide surface came into contact with the surface of the circuit board, which was therefore placed in the intended location with the intended orientation.

Similarly, for the 10 cm plastic case and the 1 cm wide plastic part, position and orientation errors were corrected using the camera during picking to grip the workpiece. During placement, the 10 cm plastic case was placed in the same way as the circuit board, with the arm pulled until contact with the guide was detected, to correct any position and orientation errors. The 1 cm wide plastic part was placed while its position and orientation were corrected by aligning it with the mark on the guide using the vision sensor on the hand.

Table 2 shows the results of this evaluation experiment. For the 10 cm plastic case, the execution time was within 4 seconds, but the position error could not be brought within the 1 mm target. For all other workpieces, we confirmed that kitting was achieved with execution times within 4 seconds while the error was corrected to within 1 mm.

| Workpiece | Evaluation point | Execution result |
|---|---|---|
| 10 cm plastic case | Average position error | 2.9 mm |
| | Maximum position error | 4.0 mm |
| | Average execution time | 3.8 seconds |
| | Maximum execution time | 4.0 seconds |
| 1 cm width plastic part | Average position error | 1.0 mm |
| | Maximum position error | 1.0 mm |
| | Average execution time | 3.6 seconds |
| | Maximum execution time | 3.7 seconds |
| Printed circuit board | Average position error | 0.0 mm |
| | Maximum position error | 0.0 mm |
| | Average execution time | 3.6 seconds |
| | Maximum execution time | 3.9 seconds |
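The comparison against the Table 1 targets can be reproduced from the maximum values in Table 2. This sketch treats the limits as inclusive, which is an interpretation of "within 1 mm" and "within 4 seconds"; the dictionary keys and the function name `missed_targets` are illustrative.

```python
from typing import Dict, List

# Maximum values transcribed from Table 2.
RESULTS: Dict[str, Dict[str, float]] = {
    "10 cm plastic case": {"max_error_mm": 4.0, "max_time_s": 4.0},
    "1 cm width plastic part": {"max_error_mm": 1.0, "max_time_s": 3.7},
    "Printed circuit board": {"max_error_mm": 0.0, "max_time_s": 3.9},
}


def missed_targets(results: Dict[str, Dict[str, float]],
                   error_limit_mm: float = 1.0,
                   time_limit_s: float = 4.0) -> Dict[str, List[str]]:
    """Return, per workpiece, which Table 1 targets its worst case missed."""
    missed: Dict[str, List[str]] = {}
    for name, r in results.items():
        reasons = []
        if r["max_error_mm"] > error_limit_mm:  # inclusive: 1.0 mm still passes
            reasons.append("position")
        if r["max_time_s"] > time_limit_s:      # inclusive: 4.0 s still passes
            reasons.append("time")
        if reasons:
            missed[name] = reasons
    return missed


if __name__ == "__main__":
    print(missed_targets(RESULTS))  # {'10 cm plastic case': ['position']}
    # Retuned 10 cm case reported in the text: 1 mm max error, about 4.2 s max time.
    print(missed_targets({"10 cm plastic case (retuned)":
                          {"max_error_mm": 1.0, "max_time_s": 4.2}}))
```

The first call matches the text: only the 10 cm plastic case misses a target, and only the position target. Applying the same check to the retuned 10 cm case flags only the time target, which illustrates the trade-off between error correction and speed.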

For the 10 cm plastic case, for which the target was not achieved, changing the speed parameters and the allowable values during placement reduced the maximum position error to 1 mm while increasing the maximum execution time to about 4.2 seconds. Since this is still shorter than the 4.6-second maximum measured when the authors performed the task by hand, we consider that the system has generally achieved performance equivalent to that of a human.

5. Conclusions

To address the increased person-hours required to program each part, a challenge for existing techniques for automating the kitting process, this paper proposed an architecture in which a robot automatically converts sensor data into abstract data that do not depend on the workpiece or the work and uses these data for control. The kitting system built on this architecture required no modification or adjustment of the program when the workpiece changed, and it could automatically perform kitting while correcting position and orientation errors at a speed equivalent to or higher than that of a human. Although this paper covered only the automation of the kitting process, the technique is expected to be readily applicable to automating fitting work in the assembly process as well.

However, issues remain in terms of autonomy and the quality of the hand for practical application. In particular, achieving autonomy requires automatically generating operation strategies that exploit contact with the environment and automatically setting each control phase parameter. Moreover, since responsiveness to position and orientation errors and high speed are in a trade-off relationship, parameters must be adjusted to balance them; simplifying this adjustment is another issue for future work. Going forward, we intend to tackle these issues to achieve practical application.

This achievement was obtained as a result of work (JPNP 16007) consigned by the New Energy and Industrial Technology Development Organization (NEDO).

References

- 1) K. Harada, “Research Trends on Assembly Automation by Using Industrial Robots,” J. Japan Soc. Prec. Eng., vol. 84, no. 4, pp. 299-302, 2018.

- 2) K. Koyama, Y. Suzuki, M. Aiguo, and M. Shimojo, “Pre-grasp Control for Various Objects by the Robot Hand Equipped with the Proximity Sensor on the Fingertip,” J. Robotics Soc. Japan, vol. 33, no. 9, pp. 712-722, 2015.

- 3) Y. Karako, S. Kawakami, K. Koyama, M. Shimojo, T. Senoo, and M. Ishikawa, “High-Speed Ring Insertion by Dynamic Observable Contact Hand,” in IEEE Int. Conf. Robotics and Automation (ICRA), 2019, pp. 2744-2750.

- 4) T. Tsuji, K. Harada, and K. Kaneko, “Fast Evaluation of Grasp Stability by Using Ellipsoidal Approximation of Friction Cone,” J. Robotics Soc. Japan, vol. 29, no. 3, pp. 278-287, 2011.

- 5) S. Doi, H. Koga, T. Seki, and Y. Okuno, “Novel Proximity Sensor for Realizing Tactile Sense in Suction Cups,” in IEEE Int. Conf. Robotics and Automation (ICRA), 2020, pp. 638-643.

The names of products in the text may be trademarks of each company.