We are Shaping the Future! Showcasing Success Stories as We Innovate for a Sustainable Tomorrow

To what extent might robots be able to act as extensions of our bodies? Scientists are exploring how to integrate AI agents with a physical form and human-like senses into our lives.

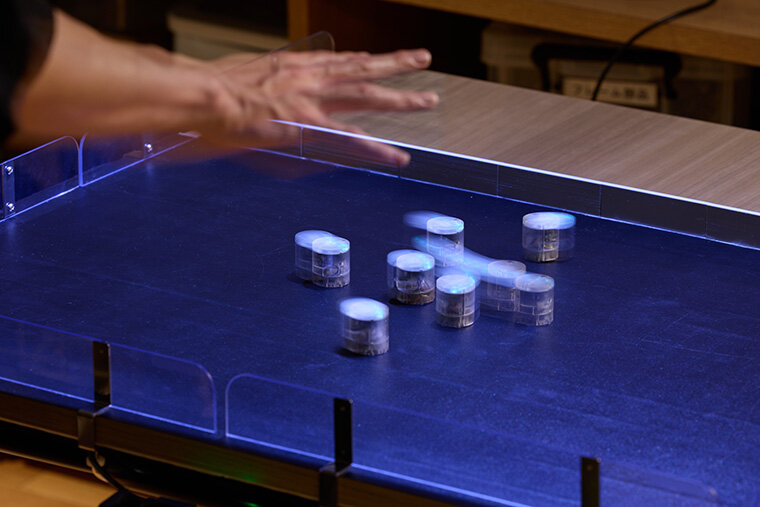

A swarm of tiny, cylindrical robots on wheels that move in sync with the wave of a hand or a flick of the finger was unveiled in Tokyo in 2024. Small, independent machines that collectively behave like schools of fish, swarm robots can arrange themselves into patterns and coordinate to manipulate larger objects.

They are examples of AI-imbued robots that might help us to push further beyond the physical boundaries of the human body. A racket or a violin, for example, is a tool that can serve as an extension of the body when playing tennis or making music -- but swarms of robots might allow us to do so much more.

Shigeo Yoshida is a principal investigator in the Integrated Interaction Group at OMRON SINIC X (OSX), a Tokyo company spun off from OMRON to establish a research base for technology to meet social needs. He is extending that research by investigating whether a system as disjointed as a swarm of robots can act as an extension of the human body -- even when the robots are physically remote from the body.

"We're exploring what that could look like when the body part isn't a fixed object, but a fluid, shape-shifting swarm," Yoshida says.

Such human-controlled swarm robots could have diverse practical applications in the future. Yoshida envisions them transforming search-and-rescue operations. With advances in hardware, swarms could squeeze through narrow gaps inaccessible to humans and help grab rubble.

Further in the future, medical applications could become a reality if human-controlled swarm robots become small enough to enter the body, helping surgeons remove tumours, for example.

"By mimicking the shapes and functions of human bodies and hands, swarm robots could conduct tasks such as grasping debris and removing tumours through intuitive operation," says Yoshida.

Ultimately, Yoshida aspires to create robot swarms that might surround and encapsulate our bodies. "With swarms, the shape of our bodies can change into so much more," he says. "That will give us an entirely new degree of flexibility and adaptability to the environment."

But first, he wants to study how people might perceive such swarms from a neurological perspective. Previous studies have investigated whether the brain can learn to adapt to the presence of artificial body parts connected to our nervous systems, such as an additional finger.

Yoshida wants to find out whether people would perceive the robots as entirely separate from themselves or whether they could use them as extensions of their bodies, like more conventional tools. "Would people recognize something as scattered as a swarm of robots as an extension of their physical bodies?" asks Yoshida. "That's a question we need to answer."

Shigeo Yoshida moving swarm robots via hand movements.


To help achieve its goals for swarm robots, OSX is bringing together talent from universities, research institutes and companies to develop AI that interacts with the world in ways that enhance wellbeing.

Yoshida believes that for people to maintain a sense of wellbeing when using AI agents, such as robot swarms, they must retain a sense of agency -- the feeling that they are driving the actions.

In a proof-of-concept study, OSX researchers found that users felt the robot swarms were an extension of their bodies and that they were in control [1].

Because individual robots within a swarm are programmed to move autonomously to avoid collisions, "we need to examine how much autonomy we can give robots, while still maintaining our sense of agency," adds Yoshida. The goal is for the robots to detect and move according to people's intentions.
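One way to picture the trade-off Yoshida describes is as a weighted blend between the velocity a user commands and a collision-avoiding correction that each robot computes for itself. The sketch below is an illustrative assumption, not OSX's control scheme: the `autonomy` weight, the `safe_dist` threshold and the simple linear repulsion rule are all hypothetical.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]


def avoidance(pos: Vec, neighbours: List[Vec], safe_dist: float) -> Vec:
    """Sum of pushes away from neighbours closer than `safe_dist` (hypothetical rule)."""
    ax, ay = 0.0, 0.0
    for nx, ny in neighbours:
        dx, dy = pos[0] - nx, pos[1] - ny
        d = math.hypot(dx, dy)
        if 0.0 < d < safe_dist:
            # Push strength is 0 at the safety boundary and grows as robots get closer.
            push = (safe_dist - d) / safe_dist
            ax += push * dx / d
            ay += push * dy / d
    return ax, ay


def blended_velocity(user_cmd: Vec, pos: Vec, neighbours: List[Vec],
                     autonomy: float, safe_dist: float = 1.0) -> Vec:
    """Mix the user's command with the robot's own avoidance behaviour.

    autonomy = 0.0 follows the user exactly; autonomy = 1.0 ignores the user.
    """
    if not 0.0 <= autonomy <= 1.0:
        raise ValueError("autonomy must lie in [0, 1]")
    ax, ay = avoidance(pos, neighbours, safe_dist)
    return ((1.0 - autonomy) * user_cmd[0] + autonomy * ax,
            (1.0 - autonomy) * user_cmd[1] + autonomy * ay)
```

In this toy model, sweeping `autonomy` from 0 to 1 moves a robot from following the hand exactly to reacting only to its neighbours. The open question in the text is where along such a range users would stop feeling that they are driving the action.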


"For AI agents to serve society, they need to interact smoothly with humans through natural language, our primary communication interface," says Atsushi Hashimoto, a principal investigator in OSX's Knowledge Computing Group.

His team developed the Vision-Language Interpreter (ViLaIn), a tool that uses large language models (LLMs) to translate vision and language inputs into structured action plans that robots can follow [2]. Many recent studies use black-box LLMs to generate robot actions directly. The team believes this approach requires checking every time whether the robot's plan is correct and safe, which in turn demands user expertise in robotics and programming.

In contrast, with ViLaIn, humans and robots only need to agree on the initial and target states. A white-box algorithm then identifies a reliable plan to reach the target state. "This is more like how humans request tasks from each other," says Hashimoto. "We don't always focus on how the other person completes the task."
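The division of labour described here, in which people and the robot agree only on the initial and target states and a transparent search works out the steps, can be illustrated with a toy planner. The following is a minimal sketch, not ViLaIn's implementation: the `Action` type, the `plan` function and the cooking-style facts and actions are all hypothetical, and breadth-first search stands in for whatever planning algorithm the team actually uses.

```python
from collections import deque
from typing import FrozenSet, List, NamedTuple, Optional


class Action(NamedTuple):
    """A hypothetical symbolic action, applicable when every fact in `pre` holds."""
    name: str
    pre: FrozenSet[str]     # facts that must be true beforehand
    add: FrozenSet[str]     # facts the action makes true
    delete: FrozenSet[str]  # facts the action makes false


def plan(init: FrozenSet[str], goal: FrozenSet[str],
         actions: List[Action]) -> Optional[List[str]]:
    """Breadth-first search from `init` to any state that contains `goal`."""
    frontier = deque([(init, [])])
    seen = {init}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:          # every goal fact holds
            return steps
        for a in actions:
            if a.pre <= state:     # preconditions satisfied
                nxt = (state - a.delete) | a.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [a.name]))
    return None                    # no sequence of actions reaches the goal


# Illustrative actions only; none of these names come from the article.
ACTIONS = [
    Action("pick_knife", frozenset({"hand_empty"}),
           frozenset({"holding_knife"}), frozenset({"hand_empty"})),
    Action("slice_tomato", frozenset({"holding_knife", "tomato_whole"}),
           frozenset({"tomato_sliced"}), frozenset({"tomato_whole"})),
    Action("put_down_knife", frozenset({"holding_knife"}),
           frozenset({"hand_empty"}), frozenset({"holding_knife"})),
]

INIT = frozenset({"hand_empty", "tomato_whole"})
GOAL = frozenset({"tomato_sliced", "hand_empty"})

if __name__ == "__main__":
    print(plan(INIT, GOAL, ACTIONS))
```

The person specifies only `INIT` and `GOAL`; every step in the returned plan can be traced to an action whose preconditions were checked, which is the sense in which such a search is inspectable rather than opaque.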

In 2024, OSX integrated ViLaIn into the ninth generation of the company's table-tennis robot. In the latest generation, table-tennis players can tell the robot what they are looking for in a practice session, such as continuing to return the ball for as long as possible.

With ViLaIn, the robot not only follows directions but can also make suggestions by observing a player's performance. But even as AI evolves, Hashimoto stresses that people -- not AI -- should always decide the goals. For that to happen, being able to give instructions through speech is key, he says. That way humans are always in the driving seat.

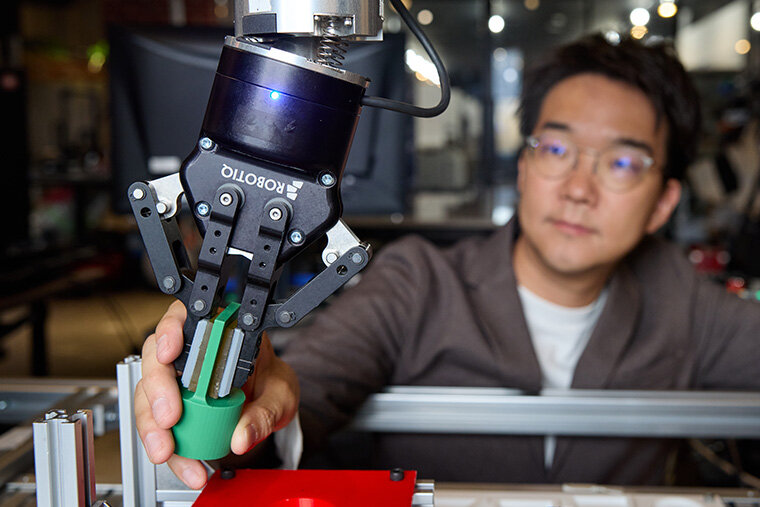

Atsushi Hashimoto operating a cooking robot.


Beyond the swarm research, OSX researchers are also working to improve the performance of robots on tasks that they currently handle poorly. Robots already work in factories, automating tasks such as welding and coating components. However, assembly tasks, such as fitting small parts into slots, have been a longstanding challenge in robotics.

The Robotics Group at OSX, along with university collaborators, has developed Saguri-bot, a soft robot designed to assemble a small number of parts and perform basic peg-in-hole tasks on assembly lines [3,4]. Saguri-bot has soft wrist-like joints, which help absorb impacts. It also has tactile senses, which allow it to adjust its positioning by 'feeling' its way across a surface, says research organizer and the group's principal investigator, Masashi Hamaya.

Hamaya believes that soft robots will be critical to the future of manufacturing, allowing humans and robots to more safely collaborate in the same space.

"The idea of rigid robotics is still the mainstream," says Hamaya. "But soft robots can more safely share the same physical space as humans, even in the case of accidents."

The team hopes that Saguri-bot will eventually be able to handle complex shapes, and be capable of learning tasks so fast it can adapt to join new assembly lines in mere moments. The researchers are currently working with experts in other areas to improve the robot's mobility and ability to recognize parts.

"Whether it's down to their physical attributes, or the way they act, robots with an element of humanness will be the ones that humans feel most comfortable using," says Hamaya. "That will be key for humans and robots to work together harmoniously."

"At OSX, we aim to realize the evolution of AI agents with physicality and five senses," he adds.

Masashi Hamaya using a Saguri-bot to put small components into a slot.


REFERENCES

1. Ichihashi, S. et al. Proc. 2024 CHI Conf. Hum. Fact. Comp. Syst. 267, 1-19 (2024).

2. Shirai, K. et al. 2024 IEEE Int. Conf. Robot. Auto. doi:10.1109/ICRA57147.2024.10611112

3. von Drigalski, F. et al. IEEE/RSJ Inter. Conf. Intellig. Robots & Sys. 8752-8757 (2020).

4. Fuchioka, Y. et al. IEEE/RSJ Inter. Conf. Intellig. Robots & Sys. 9159-9166 (2024).


The contents of this article were originally produced in partnership with Nature Portfolio as an advertisement feature and published as part of the special edition Nature Index Science Inc. in Nature (nature.com), a weekly international journal publishing the finest peer-reviewed research in science and technology. This article was published on June 26, 2025.