Conference

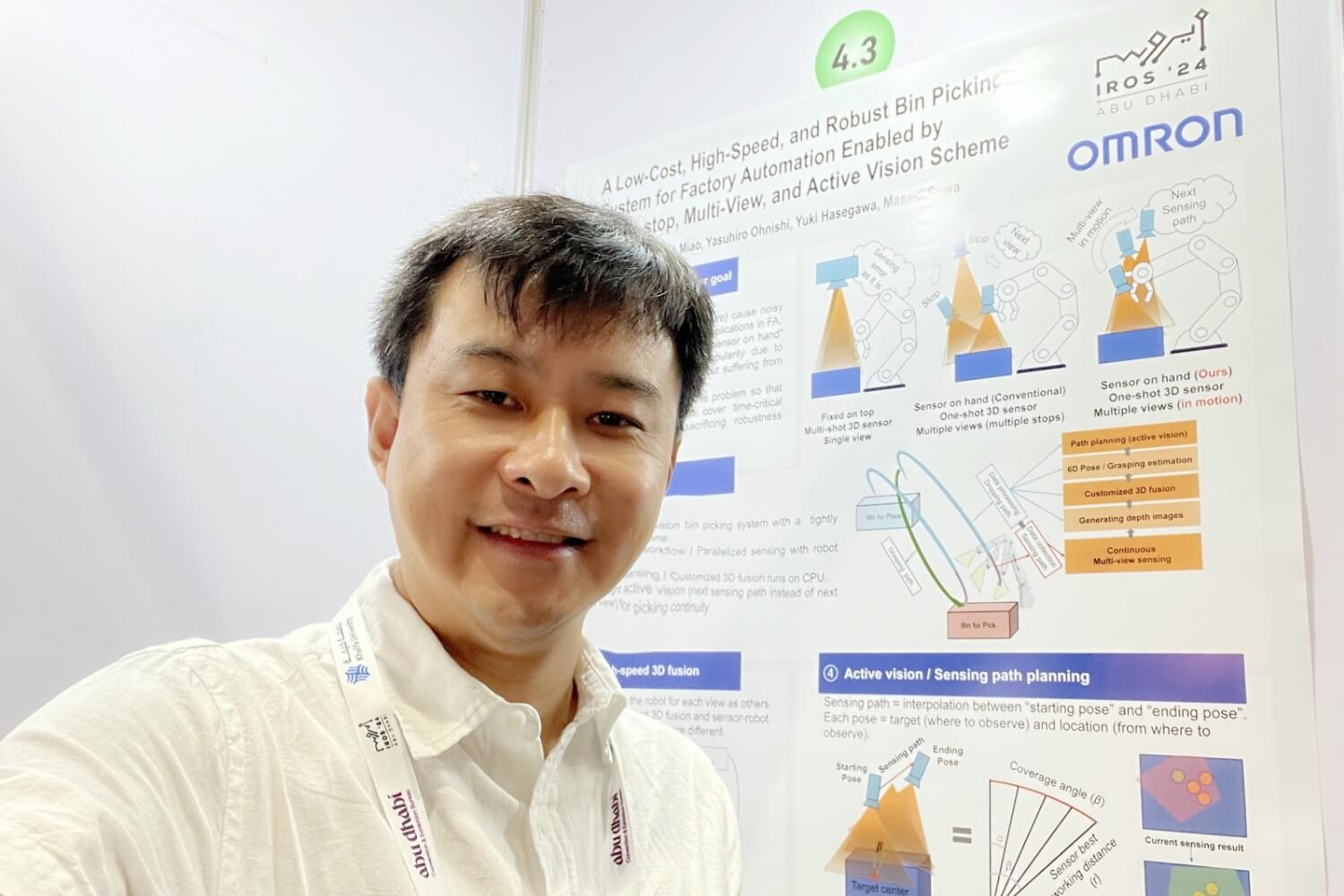

The Technology and Intellectual Property H.Q. presented 'A low-cost, high-speed, and robust bin picking system for factory automation' at IROS 2024.

Feb. 19, 2025

The 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2024), a top-tier robotics conference, was held in Abu Dhabi from October 14 to 18, 2024. Xingdou Fu of the OMRON Technology and Intellectual Property H.Q. gave a presentation at the conference. This article introduces the research he presented on a bin picking system for factory automation. To the best of our knowledge, this approach is the world's first attempt at a high-speed, active vision bin picking system.

Reporter:

Xingdou Fu

Robotics R&D Center

Technology and Intellectual Property H.Q.

Technical Specialist (information engineering)

Dr. Eng.

Paper Details:

"A Low-Cost, High-Speed, and Robust Bin Picking System for Factory Automation Enabled by a Non-stop, Multi-View, and Active Vision Scheme" [Paper][YouTube]

Xingdou Fu (OMRON), Lin Miao (OMRON), Yasuhiro Ohnishi (OMRON), Yuki Hasegawa (OMRON), and Masaki Suwa (OMRON)

Introduction:

This paper is published as part of our long-standing and consistent research into 3D sensors and sensing technologies. Our work can be traced back to the early 2010s, coinciding with the emergence of technologies such as Microsoft Kinect Fusion*1 and Intel® RealSense™ *2. Over the years, we have developed various types of 3D sensors, deploying them in numerous demonstrations and products, including body scanning, industrial inspection, driver monitoring, bin-picking for logistics, and factory automation. More specifically, the research presented in this paper aims to further enhance our 3D sensor and bin-picking products (FH-SMD) for factory automation, ensuring that we continuously innovate and think ahead to create unique future solutions.

Technical Features:

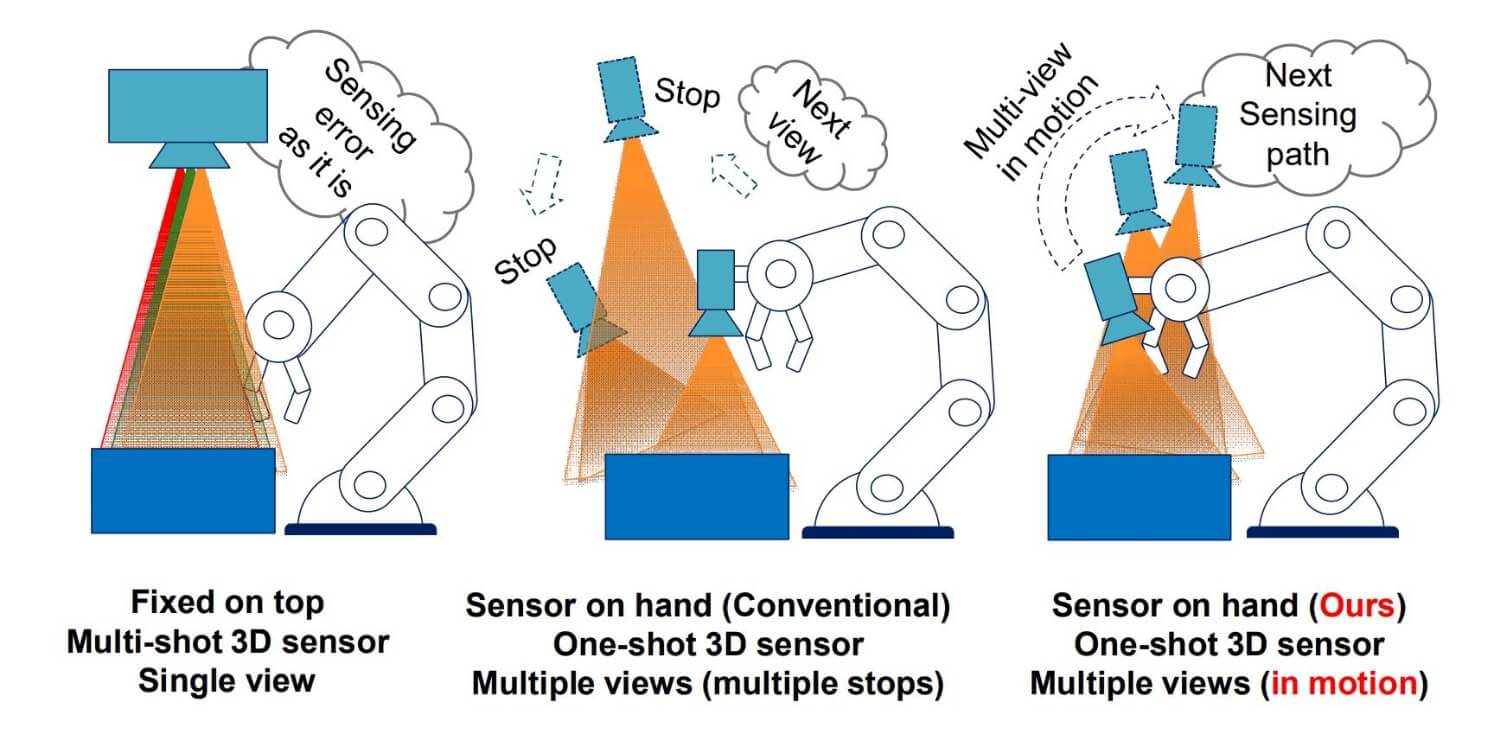

Metallic objects with specular surfaces, common in factory automation, cause significant reflection and inter-reflection errors in 3D sensor data. Mitigating these errors requires observing the target objects from multiple viewpoints. In academia, the multi-view "sensor on hand" configuration has gained popularity because it improves robustness while requiring only low-cost 3D sensors. However, these systems are slow, because they rely on moving the robot to each viewpoint. Adding further processing such as active vision (next best view), which is important for guaranteeing picking continuity, lengthens the takt time even more. This explains why such systems are usually seen only in labs. In this paper, we address this problem so that low-cost, multi-view bin picking systems can also be deployed in time-critical factory automation applications, where a short takt time is essential.

We identified that the core issue lies in treating sensing as a module decoupled from motion tasks, without a design tailored to bin-picking systems. To address this challenge, we developed a bin-picking system that tightly couples a multi-view, active vision sensing scheme with motion tasks in a "sensor on hand" configuration. This approach not only accelerates the system by parallelizing high-speed sensing with the robot's placement actions, but also determines the optimal sensing path to ensure continuity in the picking process. As a result, our solution reduced takt time by approximately 90% compared to related work, while maintaining a picking success rate above 97.75% in our experiments. To the best of our knowledge, our approach is the world's first attempt at a high-speed, active vision bin picking system.

The difference between our solution and others can be seen in the figure.
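The effect of overlapping sensing with the placement motion can be illustrated with a simple timing model. The sketch below is not the paper's method or its measurements: the function names, the timing model, and all numbers are hypothetical assumptions chosen only to show why a stop-and-go multi-view cycle grows with the number of views while a non-stop cycle may not.

```python
def stop_and_go_cycle(pick_s, place_s, view_move_s, capture_s, n_views):
    """Hypothetical decoupled cycle: after placing, the robot moves to each
    viewpoint and stops to capture, so sensing time adds to motion time."""
    return pick_s + place_s + n_views * (view_move_s + capture_s)


def non_stop_cycle(pick_s, place_s, capture_s, n_views):
    """Hypothetical coupled cycle: captures are taken while the arm carries
    out the placement motion, so sensing only costs extra time if it
    outlasts that motion."""
    sensing_s = n_views * capture_s
    return pick_s + max(place_s, sensing_s)


if __name__ == "__main__":
    # All timings are made-up illustrative values in seconds.
    slow = stop_and_go_cycle(pick_s=1.0, place_s=1.5,
                             view_move_s=0.75, capture_s=0.125, n_views=4)
    fast = non_stop_cycle(pick_s=1.0, place_s=1.5,
                          capture_s=0.125, n_views=4)
    print(f"stop-and-go: {slow:.2f} s, non-stop: {fast:.2f} s")
```

In this toy model the non-stop cycle stays constant as views are added, as long as the total capture time fits within the placement motion. A real system would also need to account for motion blur, path constraints, and processing latency.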

Reactions from IROS 2024 Participants:

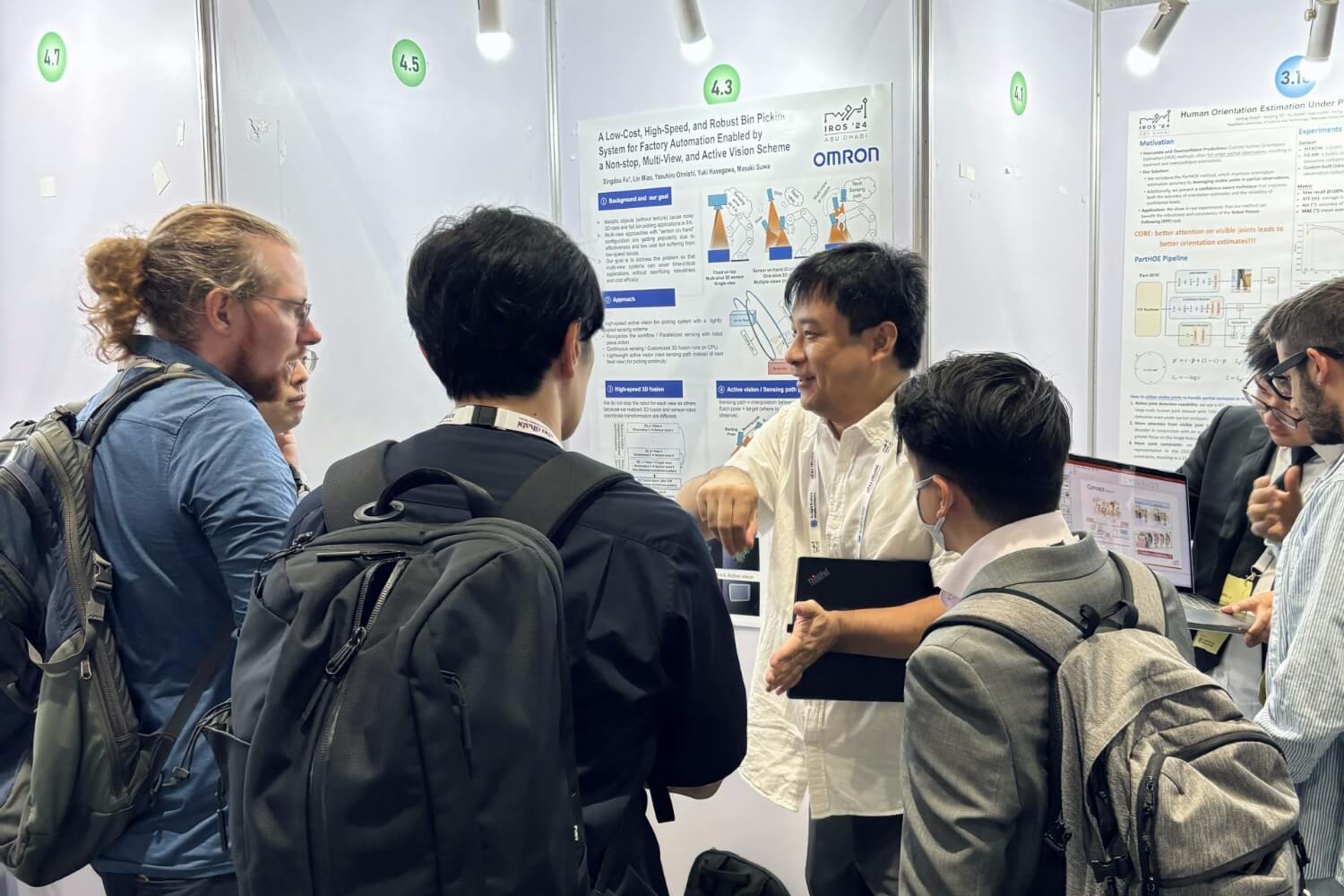

Many researchers visited our poster to learn more about our work, and nothing brings me more joy than receiving positive feedback from peers in the same field. A participant from Canada, an author of another bin picking paper presented at IROS 2024, remarked that no one had ever proposed an idea like ours, one that achieves active vision and high speed at the same time in a bin-picking system. We are thrilled that our work has been recognized by experts worldwide. Additionally, Salvatore D'Avella, an Italian assistant professor and the organizer of the Robotic Grasping and Manipulation Challenge (RGMC) *3, has invited us to take part in the picking challenge. Picking time is one of the important metrics in this challenge.

Future Technical Outlook:

As mentioned, this publication is part of our ongoing research on 3D sensors and 3D sensing technologies. It is neither the beginning nor the end of our work. After addressing the challenges of 3D sensing for metallic objects, we turned our focus to the next challenge: 3D sensing of transparent objects. As you can imagine, enabling a robot to "see" glassware in 3D, whether in a medical lab or in daily life, is a crucial step toward advancing automation. To date, our sensing team has explored various approaches, including multi-view techniques, new optics, and AI-based algorithms. Fortunately, our new members have already made exciting progress that will help drive future applications in lab automation.

Project Members:

This is a team effort that reflects the diverse contributions of every member, and as the project leader, I am deeply grateful for the support from each of them. This research has been supported by in-depth technical discussions with Dr. Suwa, who led the research and development of 3D sensing technologies for many years as a Specialist (current position: executive officer of OMRON and senior general manager of the Technology & Intellectual Property HQ). Mr. Ohnishi, as group leader, and Ms. Hasegawa, as manager, made significant contributions by facilitating communication with our business partners, ensuring that the technical innovations aligned with customer needs. Ms. Miao joined the project shortly after coming to OMRON, and her work on parameter optimization and experimental analysis played a crucial role in driving the evolution of our design.

*1 KinectFusion: Real-time 3D Reconstruction and Interaction Using a Moving Depth Camera - Microsoft Research

*2 Intel® RealSense™ Technology

*3 https://sites.google.com/view/rgmc2025